Introduction to Google BigQuery

In this tutorial, we will explore BigQuery’s architecture in detail, covering the separation of storage and compute, columnar data storage and query execution, and its distributed nature that enables scalability.

1. BigQuery Storage and Compute Separation

One of the key features of BigQuery’s architecture is the decoupling of storage and compute resources. This separation allows users to scale each component independently, which offers numerous advantages such as cost efficiency and flexibility.

Benefits of Storage and Compute Separation

For example, imagine a company storing 100 TB of sales data in BigQuery. They don't need to pre-allocate compute resources for this stored data until they perform a query. By dynamically engaging compute power only during queries, the company can manage its data costs more efficiently.

How Does Storage and Compute Separation Work?

BigQuery stores data in Google Cloud Storage in a highly optimized columnar format. It handles the physical storage, data replication, and backup across multiple regions to ensure durability and availability. Compute resources, on the other hand, are dynamically allocated when a query is executed.For example, if a company stores 100 TB of historical sales data in BigQuery, it doesn’t need to allocate compute resources for this data at rest. Instead, compute resources are only engaged when the team queries or analyzes the data. This not only reduces operational costs but also speeds up query execution.

2. Columnar Data Storage and Query Execution

BigQuery employs columnar storage, which allows for highly efficient querying, especially for analytical workloads. Instead of storing data row-by-row (like traditional databases), BigQuery organizes data in columns. This enables fast access to only the columns needed for a query, reducing the amount of data scanned.

Columnar Storage Example:

Imagine querying a large dataset containing millions of records about customer purchases. If the query only needs information about the customer_name and purchase_amount, BigQuery will only scan the relevant columns. This optimizes the query performance and reduces the cost, as the amount of data processed is minimized.

Query Execution Using Dremel:

BigQuery’s query execution engine is powered by Dremel, Google’s distributed query system designed for interactive analysis of large datasets. Dremel enables BigQuery to scan petabytes of data in seconds by distributing the query across many nodes in parallel.

3. Distributed Architecture and Scalability

BigQuery’s distributed architecture allows for horizontal scaling, which means that as the volume of data grows, BigQuery can seamlessly scale by distributing queries across a cluster of machines. This distributed nature ensures that BigQuery can handle vast datasets with high query concurrency while maintaining fast performance.

Distributed Query Processing:

When a query is executed, BigQuery breaks it down into smaller components. These components are then distributed across many worker nodes in parallel. Each worker node processes its part of the query, and the results are aggregated and returned to the user.

Imagine a streaming service like Netflix or Hulu handling millions of users watching content simultaneously. On a normal day, the platform collects data on user behavior—what shows are being watched, user preferences, and playback times. During a big event, like the release of a highly anticipated movie or series finale, the number of users can skyrocket, leading to a massive increase in data.

With BigQuery’s distributed architecture, the platform can run queries on this enormous dataset without slowing down. For instance, if the company wants to analyze real-time viewer trends—like which regions are streaming the new release the most—BigQuery will automatically distribute the query across many machines, processing the data quickly and efficiently. Even as data scales up dramatically, the platform doesn’t have to worry about performance issues because BigQuery’s system can handle large workloads seamlessly, ensuring a smooth experience for users and real-time insights for the business.

Example of Scalability:

Let’s say an e-commerce platform wants to analyze traffic and sales patterns during Black Friday. The platform might experience an exponential increase in data volume, from gigabytes to terabytes or even petabytes. BigQuery’s distributed architecture allows it to scale horizontally, processing large volumes of data without any additional configuration from the user. The system can manage massive spikes in workload without slowing down.

Example

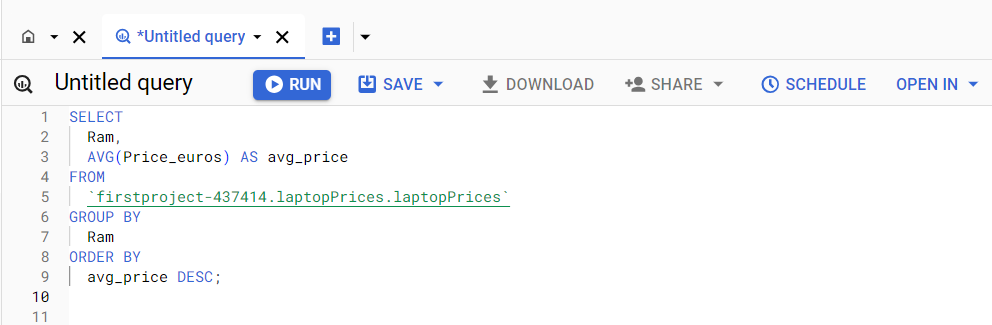

Suppose we are given a dataset of laptop prices , and we want to analyze the average laptop price by RAM size. Here’s how you would write a query in BigQuery-

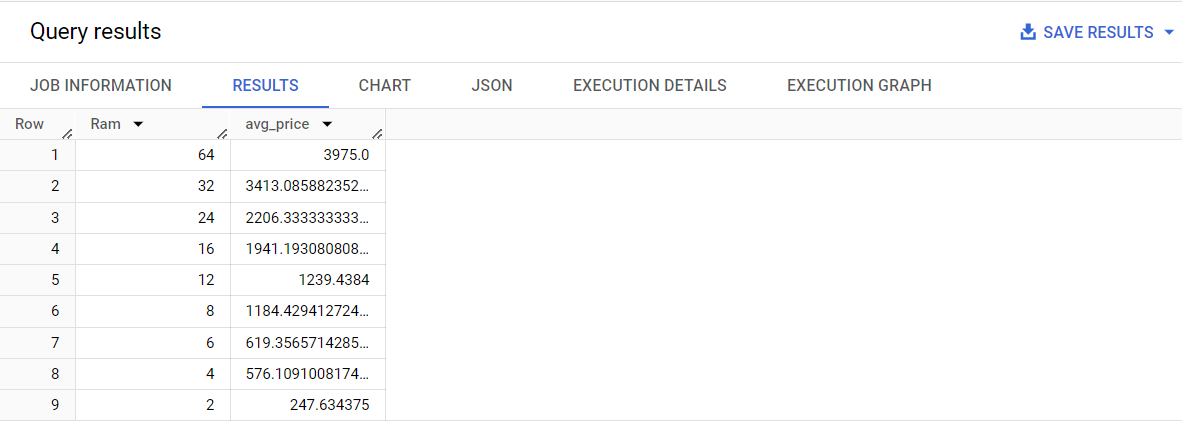

Output

Explanation

In this code, the full reference firstproject-437414.laptopPrices.laptopPrices points to the laptopPrices table within the laptopPrices dataset, stored in the firstproject-437414 Google Cloud project.

This query calculates the average price of laptops based on their RAM size from the laptopPrices table. It groups the results by the Ram column, then computes the average value of Price_euros for each group. Finally, the results are ordered in descending order of the average price.