How to Add a New Column in Spark Scala - withColumn

Adding a new column to a DataFrame is one of the most common operations in Spark with Scala. This tutorial shows how to do it with the withColumn method. withColumn takes a column name and a Column expression and returns a new DataFrame: if the name is not yet present, the column is added; if a column with that name already exists, it is replaced. The original DataFrame is not modified.
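As a minimal sketch of the add-versus-replace behaviour described above, the snippet below calls withColumn once with a new name and once with an existing name. The `country` column, its value `"IN"`, and the object and method names are hypothetical and chosen only for illustration; `lit` and `upper` are standard functions from `org.apache.spark.sql.functions`.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, lit, upper}

object WithColumnAddOrReplace {
  // New name: a constant column is appended (hypothetical "country" column)
  def addCountry(df: DataFrame): DataFrame =
    df.withColumn("country", lit("IN"))

  // Existing name: the first_name column is replaced by its upper-case version
  def upperFirstName(df: DataFrame): DataFrame =
    df.withColumn("first_name", upper(col("first_name")))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("withColumn add or replace")
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    val df = Seq((1, "rahul"), (2, "sanjay")).toDF("roll", "first_name")

    println(addCountry(df).columns.mkString(", "))     // roll, first_name, country
    println(upperFirstName(df).columns.mkString(", ")) // roll, first_name
    println(df.columns.mkString(", "))                 // roll, first_name (df itself is unchanged)

    spark.stop()
  }
}
```

Because each call returns a new DataFrame, the result has to be assigned to a value; calling withColumn without using its return value has no effect on the original.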

Sample Data

| Roll | First Name | Age | Last Name |
|---|---|---|---|
| 1 | rahul | 30 | yadav |
| 2 | sanjay | 67 | gupta |
| 3 | ranjan | 67 | kumar |

1. Import Required Libraries

First, you need to import the necessary libraries:

    import org.apache.spark.sql.{Row, SparkSession}
    import org.apache.spark.sql.functions.{col, concat_ws}
    import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

2. Create Sample DataFrame

    // Define the schema: column name, data type, and whether nulls are allowed
    val schema = StructType(Array(
      StructField("roll", IntegerType, true),
      StructField("first_name", StringType, true),
      StructField("age", IntegerType, true),
      StructField("last_name", StringType, true)
    ))

    // Create the data
    val data = Seq(
      Row(1, "rahul", 30, "yadav"),
      Row(2, "sanjay", 67, "gupta"),
      Row(3, "ranjan", 67, "kumar")
    )

    // Create the DataFrame
    // (sparkSession is the SparkSession built in the complete code in step 4)
    val rdd = sparkSession.sparkContext.parallelize(data)
    val testDF = sparkSession.createDataFrame(rdd, schema)
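For small, hard-coded samples like this one, a shorter route is to build the DataFrame from a Seq of tuples with `toDF`. The sketch below is an alternative to the Row-plus-schema approach above, not a replacement for it; the object and method names are hypothetical.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object CreateWithToDF {
  // Builds the same sample data from tuples; column names are passed to toDF
  def build(spark: SparkSession): DataFrame = {
    import spark.implicits._ // brings toDF into scope for Seq of tuples
    Seq(
      (1, "rahul", 30, "yadav"),
      (2, "sanjay", 67, "gupta"),
      (3, "ranjan", 67, "kumar")
    ).toDF("roll", "first_name", "age", "last_name")
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("Create DataFrame with toDF")
      .master("local[*]")
      .getOrCreate()

    val testDF = build(spark)
    testDF.printSchema()
    testDF.show()

    spark.stop()
  }
}
```

One difference to keep in mind: with this approach the types and nullability are inferred from the Scala tuple, so the Int columns come out as non-nullable, whereas the explicit StructType above marks every column as nullable. When nullability or exact types matter, the explicit schema is the safer choice.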

3. Use withColumn method

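Call withColumn with the name of the new column and a Column expression that computes its value. Here, concat_ws joins first_name and last_name with a space as the separator:

    val transformedDF = testDF.withColumn(
      "full_name",
      concat_ws(" ", col("first_name"), col("last_name"))
    )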
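Since the schema allows nulls in first_name and last_name, it helps to know how the expression behaves when one of them is missing. The sketch below compares `concat_ws`, which skips null inputs, with `concat`, which returns null if any input is null. The column names `full_name_ws` and `full_name_concat` and the sample row with a missing last name are hypothetical.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, concat, concat_ws, lit}

object ConcatNullHandling {
  // Adds the full name twice: once with concat_ws, once with concat
  def withBothNames(df: DataFrame): DataFrame =
    df.withColumn("full_name_ws", concat_ws(" ", col("first_name"), col("last_name")))
      .withColumn("full_name_concat", concat(col("first_name"), lit(" "), col("last_name")))

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("concat_ws vs concat")
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    // The second row has no last name (None becomes null in the DataFrame)
    val df = Seq[(String, Option[String])](
      ("rahul", Some("yadav")),
      ("sanjay", None)
    ).toDF("first_name", "last_name")

    // For "sanjay": full_name_ws is "sanjay", full_name_concat is null
    withBothNames(df).show()

    spark.stop()
  }
}
```

For a display name, skipping nulls as concat_ws does is usually the more forgiving behaviour; concat is the better fit when a missing part should make the whole value null.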

4. Complete code

    import org.apache.spark.sql.{Row, SparkSession}
    import org.apache.spark.sql.functions.{col, concat_ws}
    import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

    object AddColumn {
      def main(args: Array[String]): Unit = {
        val sparkSession = SparkSession
          .builder()
          .appName("Add new column to spark dataframe")
          .master("local")
          .getOrCreate()

        val schema = StructType(Array(
          StructField("roll", IntegerType, true),
          StructField("first_name", StringType, true),
          StructField("age", IntegerType, true),
          StructField("last_name", StringType, true)
        ))

        val data = Seq(
          Row(1, "rahul", 30, "yadav"),
          Row(2, "sanjay", 67, "gupta"),
          Row(3, "ranjan", 67, "kumar")
        )

        val rdd = sparkSession.sparkContext.parallelize(data)
        val testDF = sparkSession.createDataFrame(rdd, schema)

        val transformedDF = testDF.withColumn(
          "full_name",
          concat_ws(" ", col("first_name"), col("last_name"))
        )

        transformedDF.show()

        sparkSession.stop()
      }
    }
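The program above adds a single column. When several columns are needed, withColumn calls can be chained, but each call adds a projection to the query plan, and the Spark API documentation suggests a single `select` when many columns are added at once. The sketch below shows both forms side by side; the `age_next_year` column and the object and method names are hypothetical and used only for illustration.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, concat_ws}

object AddSeveralColumns {
  // Chained withColumn: fine for a handful of columns
  def chained(df: DataFrame): DataFrame =
    df.withColumn("full_name", concat_ws(" ", col("first_name"), col("last_name")))
      .withColumn("age_next_year", col("age") + 1)

  // Single select: keeps all existing columns and adds the new ones in one projection
  def viaSelect(df: DataFrame): DataFrame =
    df.select(
      col("*"),
      concat_ws(" ", col("first_name"), col("last_name")).as("full_name"),
      (col("age") + 1).as("age_next_year")
    )

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("Add several columns")
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    val df = Seq(
      (1, "rahul", 30, "yadav"),
      (2, "sanjay", 67, "gupta")
    ).toDF("roll", "first_name", "age", "last_name")

    chained(df).show()
    viaSelect(df).show()

    spark.stop()
  }
}
```

Both functions produce the same columns in the same order; the difference only starts to matter when columns are added in a loop or in large numbers.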

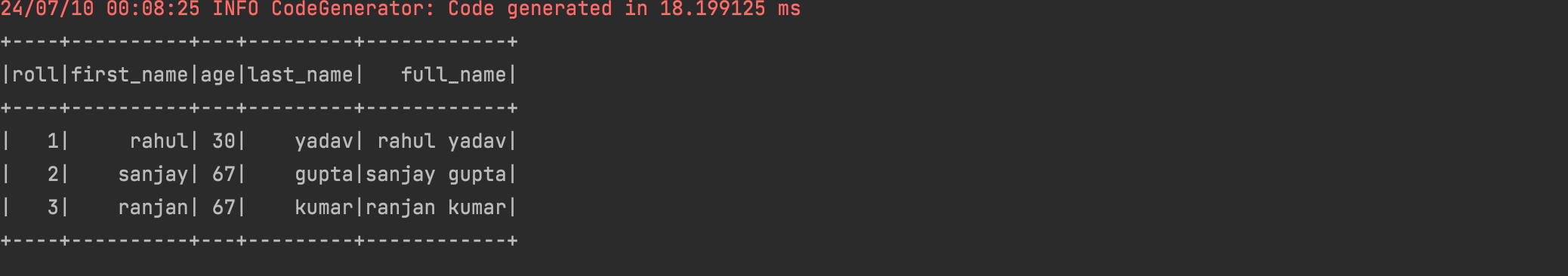

Output