If else condition in Spark Scala - Case When in SQL

In the SQL world, we very often write a CASE WHEN statement to deal with conditions. Spark provides the `when` function for the same purpose, and it can handle multiple conditions.
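To make the SQL comparison concrete, here is a minimal sketch that computes one column both ways: once with a SQL `CASE WHEN` string passed to `expr`, and once with `when(...).otherwise(...)`. The object name `CaseWhenVsWhen`, the in-memory rows and the `age_group` column are illustrative assumptions, not part of the original example.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, expr, lit, when}

object CaseWhenVsWhen {

  // Returns the same derived column computed two ways: SQL CASE WHEN and the when() API
  def ageGroups(spark: SparkSession): (DataFrame, DataFrame) = {
    import spark.implicits._

    // Hypothetical in-memory rows, so the sketch does not need a CSV file
    val df = Seq(("Rahul", 18), ("priya", 27)).toDF("first_name", "age")

    // SQL-style CASE WHEN, parsed from a string by expr()
    val viaSql = df.withColumn("age_group",
      expr("CASE WHEN age < 21 THEN 'under 21' ELSE '21 and over' END"))

    // The same logic written with when(...).otherwise(...)
    val viaApi = df.withColumn("age_group",
      when(col("age") < 21, lit("under 21")).otherwise(lit("21 and over")))

    (viaSql, viaApi)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("CASE WHEN vs when")
      .master("local")
      .getOrCreate()
    val (viaSql, viaApi) = ageGroups(spark)
    viaSql.show()
    viaApi.show()
    spark.stop()
  }
}
```

Both DataFrames end up with identical rows; which style to use is mostly a matter of taste, although the `when` API is checked by the Scala compiler while the SQL string is only parsed at runtime.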

In this article, we will talk about the following:

- when

- when otherwise

- when with multiple conditions
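The three forms listed above can be sketched side by side on a small in-memory copy of the sample data. This is an illustrative sketch assuming hypothetical column names (`roll`, `first_name`, `age`, `gender`) and a hypothetical object `WhenForms`. It shows that `when` without `otherwise` leaves non-matching rows as null, that `otherwise` supplies the else value, and that several conditions can be combined inside one `when` with `&&` (and); `||` (or) works the same way.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, lit, lower, when}

object WhenForms {

  def withFlags(spark: SparkSession): DataFrame = {
    import spark.implicits._

    // Hypothetical in-memory rows mirroring the sample data
    val df = Seq(
      (1, "Rahul", 18, "Male"),
      (2, "priya", 27, "Female"),
      (3, "Ranjan", 27, "Male")
    ).toDF("roll", "first_name", "age", "gender")

    // 1. when alone: rows that match no condition get null
    val step1 = df.withColumn("teen_when_only", when(col("age") < 20, lit("yes")))

    // 2. when ... otherwise: the otherwise value replaces that null
    val step2 = step1.withColumn("teen_with_otherwise",
      when(col("age") < 20, lit("yes")).otherwise(lit("no")))

    // 3. multiple conditions in one when, combined with && (and)
    step2.withColumn("male_over_25",
      when((lower(col("gender")) === "male") && (col("age") > 25), lit(true))
        .otherwise(lit(false)))
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("when forms")
      .master("local")
      .getOrCreate()
    withFlags(spark).show()
    spark.stop()
  }
}
```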

Sample Data

| Roll | First Name | Age | Last Name | Gender |
|---|---|---|---|---|
| 1 | Rahul | 18 | Yadav | Male |
| 2 | priya | 27 | gupta | Female |
| 3 | Ranjan | 27 | kumar | Male |
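If you do not have `data/csv/test2.csv` at hand, one way to reproduce the sample table is to parse CSV lines from an in-memory `Dataset[String]`, which `DataFrameReader.csv` accepts since Spark 2.2. The header `roll,first_name,age,last_name,gender` and the object name `SampleData` are assumptions: the header is chosen to match the column names the article's code uses, since the original file's header is not shown. With `inferSchema` enabled, `roll` and `age` become integers; without it, as in the article's code, every column is read as a string.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object SampleData {

  def load(spark: SparkSession): DataFrame = {
    import spark.implicits._

    // Assumed CSV header; the original file's header is not shown in the article
    val lines = Seq(
      "roll,first_name,age,last_name,gender",
      "1,Rahul,18,Yadav,Male",
      "2,priya,27,gupta,Female",
      "3,Ranjan,27,kumar,Male"
    ).toDS()

    spark.read
      .option("header", "true")
      .option("inferSchema", "true") // roll and age are inferred as integers
      .csv(lines)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("sample data")
      .master("local")
      .getOrCreate()
    val df = load(spark)
    df.printSchema()
    df.show()
    spark.stop()
  }
}
```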

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, concat_ws, lit, lower, when}

object CaseWhenSparkScala {

  def main(args: Array[String]): Unit = {

    val sparkSession = SparkSession
      .builder()
      .appName("Case When Spark in Scala with example")
      .master("local")
      .getOrCreate()

    // With header=true the first CSV line supplies the column names and every column is read as a string.
    // The code below assumes the header names the columns gender, first_name and last_name.
    val testDF = sparkSession.read.option("header", "true").csv("data/csv/test2.csv")

    // To print the schema
    testDF.printSchema()

    val transformedDF = testDF.withColumn("full_name",
      when(
        // condition: lower() makes the match case-insensitive, so "Male" in the data matches "male"
        lower(col("gender")) === lit("male"),
        // value
        concat_ws(" ", lit("Mr."), col("first_name"), col("last_name"))
      ).when(
        // condition
        lower(col("gender")) === lit("female"),
        // value
        concat_ws(" ", lit("Ms."), col("first_name"), col("last_name"))
      ).otherwise(
        // else part: any other gender value, including null
        concat_ws(" ", lit("Unknown"), col("first_name"), col("last_name"))
      )
    )

    transformedDF.show()

    sparkSession.stop()
  }
}
```
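The chained `when(...).when(...)` calls are evaluated in order, like the branches of a SQL `CASE WHEN`: the first condition that is true decides the value, and later branches are not consulted for that row. A minimal sketch with deliberately overlapping conditions makes this visible; the `age` column, the `bucket` column and the object name `FirstMatchWins` are hypothetical. An age of 40 matches `> 21` first, so the `> 30` branch never fires. When conditions overlap, put the most specific one first.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, lit, when}

object FirstMatchWins {

  def classify(spark: SparkSession): DataFrame = {
    import spark.implicits._

    // Hypothetical ages; 40 satisfies both "> 21" and "> 30"
    val df = Seq(18, 27, 40).toDF("age")

    df.withColumn("bucket",
      when(col("age") > 21, lit("over 21"))
        .when(col("age") > 30, lit("over 30")) // never chosen: "> 21" already matched
        .otherwise(lit("21 or under")))
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("first match wins")
      .master("local")
      .getOrCreate()
    classify(spark).show()
    spark.stop()
  }
}
```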

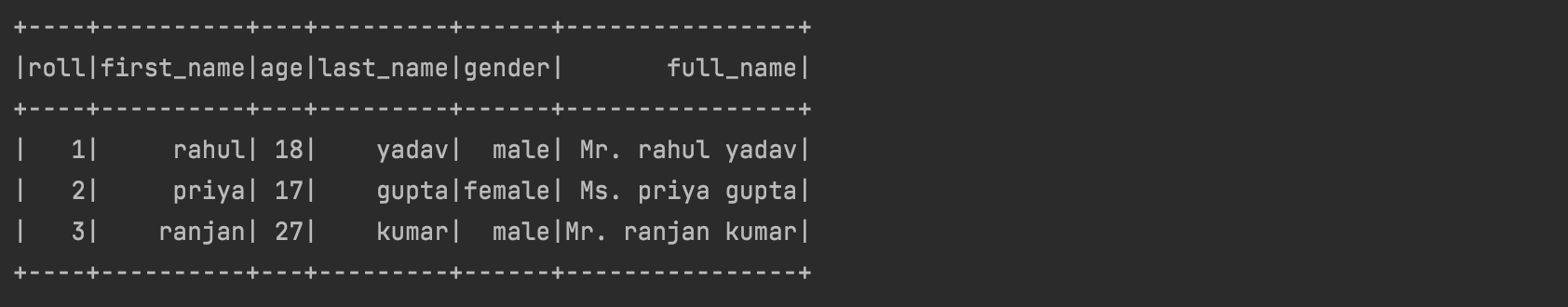

As you can see in the output, one extra column, full_name, has been added.

Output: