Introduction to RDD in Scala Spark - Comprehensive Guide

Understanding RDD in Scala Spark

Resilient Distributed Datasets (RDDs) are the fundamental data structure of Apache Spark. An RDD is an immutable, distributed collection of objects. This guide explores how to create, transform, and operate on RDDs using Scala in Spark.

1. What is an RDD?

An RDD is a distributed collection of elements that can be operated on in parallel. RDDs support two types of operations: transformations and actions. A transformation (such as map or filter) creates a new RDD from an existing one and is evaluated lazily. An action (such as count or collect) triggers the computation and either returns a result to the driver program or writes it to storage.
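The difference between the two kinds of operations can be sketched as follows. This is a minimal illustration rather than code from the original guide: the object name, the application name, and the sample numbers are assumptions chosen for the example.

```scala
import org.apache.spark.sql.SparkSession

object TransformationsVsActions {
  def main(args: Array[String]): Unit = {
    // Hypothetical local session used only for this sketch
    val spark = SparkSession
      .builder()
      .appName("transformations-vs-actions")
      .master("local[*]")
      .getOrCreate()

    val numbers = spark.sparkContext.parallelize(Seq(1, 2, 3, 4, 5))

    // Transformations: each call returns a new RDD and runs no job yet
    val doubled = numbers.map(_ * 2)
    val large = doubled.filter(_ > 4)

    // Actions: trigger the computation and return results to the driver
    val result: Array[Int] = large.collect()
    val total: Long = large.count()

    println(result.mkString(", ")) // 6, 8, 10
    println(total)                 // 3

    spark.stop()
  }
}
```

Note that numbers, doubled, and large are three separate RDDs: map and filter leave their input unchanged, which is what immutability means in practice.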

2. Creating RDDs

There are multiple ways to create RDDs in Spark:

- From a local collection

- From external storage (HDFS, S3, etc.)

- By transforming an existing RDD

2.1. Creating RDDs from a Local Collection

You can create an RDD from a local collection (such as an array) using the parallelize method:

import org.apache.spark.sql.SparkSession

object RDDCreation {

def main(args: Array[String]): Unit = {

val sparkSession = SparkSession

.builder()

.appName("Our First scala spark code")

.master("local")

.getOrCreate()

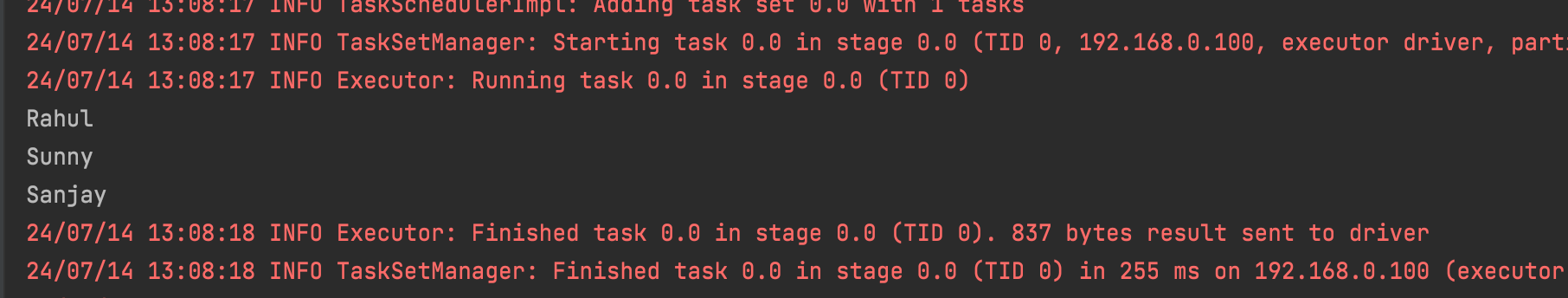

val data: Array[String] = Array("Rahul", "Sunny", "Sanjay")

//RDD

val rdd = sparkSession.sparkContext.parallelize(data)

rdd.foreach(value => println(value))

}

}
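parallelize also accepts an optional second argument, numSlices, that sets how many partitions the collection is split into. The sketch below reuses the sample names from above; the object name, the application name, and the choice of three partitions are assumptions made for illustration.

```scala
import org.apache.spark.sql.SparkSession

object ParallelizePartitions {
  def main(args: Array[String]): Unit = {
    // Hypothetical local session with two worker threads
    val spark = SparkSession
      .builder()
      .appName("parallelize-partitions")
      .master("local[2]")
      .getOrCreate()

    val data = Array("Rahul", "Sunny", "Sanjay")

    // numSlices requests the number of partitions for the new RDD
    val rdd = spark.sparkContext.parallelize(data, numSlices = 3)
    println(s"partitions: ${rdd.getNumPartitions}") // partitions: 3

    // glom() turns each partition into an array so its contents can be inspected
    rdd.glom().collect().foreach(p => println(p.mkString("[", ", ", "]")))

    // collect() returns all elements to the driver in partition order
    println(rdd.collect().mkString(", ")) // Rahul, Sunny, Sanjay

    spark.stop()
  }
}
```

When numSlices is omitted, Spark falls back to the context's default parallelism, which depends on the master setting.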

2.2. Creating RDDs from External Storage

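RDDs can be created from data in external storage systems, such as HDFS, S3, or any other Hadoop-supported file system. For text files, use the textFile method, which returns an RDD with one element per line of the file: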

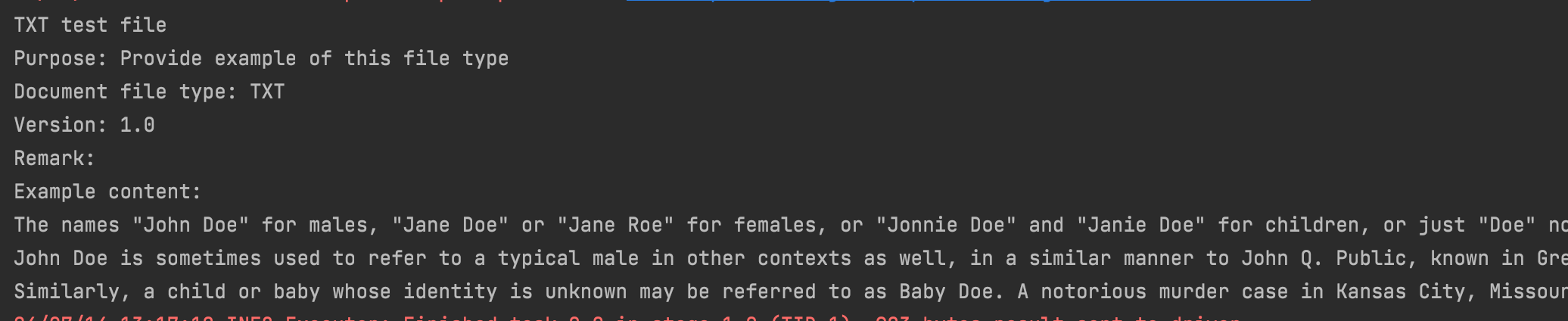

    // Requires: import org.apache.spark.rdd.RDD
    val rawRDD: RDD[String] = sparkSession.sparkContext.textFile("data/text/data.txt")

    // Print each line of the file
    rawRDD.foreach(value => println(value))
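To try textFile without preparing data/text/data.txt first, the following self-contained sketch writes a small temporary file and reads it back. The file contents, object name, and application name are hypothetical. It also prints through take rather than foreach: foreach(println) runs on the executors, so on a real cluster its output goes to the executor logs instead of the driver console, whereas take and collect bring the elements to the driver first.

```scala
import java.nio.file.Files
import org.apache.spark.sql.SparkSession

object TextFileSketch {
  def main(args: Array[String]): Unit = {
    // Hypothetical local session used only for this sketch
    val spark = SparkSession
      .builder()
      .appName("textfile-sketch")
      .master("local[*]")
      .getOrCreate()

    // Write three sample lines to a temporary file (illustrative data)
    val path = Files.createTempFile("rdd-sample", ".txt")
    Files.write(path, "first line\nsecond line\nthird line\n".getBytes("UTF-8"))

    // textFile returns an RDD[String] with one element per line
    val lines = spark.sparkContext.textFile(path.toUri.toString)

    println(lines.count()) // 3

    // take(n) returns the first n elements to the driver before printing
    lines.take(2).foreach(println)
    // first line
    // second line

    spark.stop()
    Files.deleteIfExists(path)
  }
}
```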

Complete Code

    import org.apache.spark.rdd.RDD
    import org.apache.spark.sql.SparkSession

    object RDDCreation {
      def main(args: Array[String]): Unit = {
        // Start a Spark session; "local" runs Spark in-process with a single worker thread
        val sparkSession = SparkSession
          .builder()
          .appName("Our First scala spark code")
          .master("local")
          .getOrCreate()

        // 2.1: create an RDD from a local collection
        val data: Array[String] = Array("Rahul", "Sunny", "Sanjay")
        val rdd = sparkSession.sparkContext.parallelize(data)
        rdd.foreach(value => println(value))

        // 2.2: create an RDD from a text file, one element per line
        val rawRDD: RDD[String] = sparkSession.sparkContext.textFile("data/text/data.txt")
        rawRDD.foreach(value => println(value))

        // Release Spark resources when done
        sparkSession.stop()
      }
    }