How to Delete or Drop a Column from a Spark DataFrame

In Scala, you delete a column from a Spark DataFrame either with drop(), which removes the columns you name, or with select(), which keeps only the columns you list. Both return a new DataFrame and leave the original unchanged.

Sample Data

| Roll | First Name | Age | Last Name |
|---|---|---|---|
| 1 | Rahul | 30 | Yadav |
| 2 | Sanjay | 20 | Gupta |
| 3 | Ranjan | 67 | Kumar |

Step 1: Import Required Libraries

First, import the necessary libraries. The col function is needed later to refer to a column by name:

    import org.apache.spark.sql.functions.col
    import org.apache.spark.sql.{Row, SparkSession}
    import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

Step 2: Create Sample DataFrame

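For demonstration purposes, let's create a sample DataFrame. The snippet assumes a SparkSession named sparkSession already exists; it is created in the complete code at the end of this guide.

    // Schema: four nullable columns matching the sample data
    val schema = StructType(Array(
      StructField("roll", IntegerType, true),
      StructField("first_name", StringType, true),
      StructField("age", IntegerType, true),
      StructField("last_name", StringType, true)
    ))

    // One Row per record, in the same order as the schema fields
    val data = Seq(
      Row(1, "rahul", 30, "yadav"),
      Row(2, "sanjay", 20, "gupta"),
      Row(3, "ranjan", 67, "kumar")
    )

    // Turn the local Seq into an RDD, then apply the schema
    val rdd = sparkSession.sparkContext.parallelize(data)
    val testDF = sparkSession.createDataFrame(rdd, schema)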

Step 3: Use the drop Method to Delete Single or Multiple Columns

Using drop:

    // Drop a single column by passing a Column reference
    val transformedDF = testDF.drop(col("roll"))

    // Drop multiple columns by passing their names
    val multiDropDF = testDF.drop("roll", "age")
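The introduction mentions select() as an alternative to drop(), so here is a minimal sketch of that approach. The object name SelectInsteadOfDrop and the helper selectAllExcept are hypothetical names made up for this example, and the sample DataFrame is built with toDF on tuples purely for brevity (an assumption, not the method used in Step 2).

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.col

object SelectInsteadOfDrop {

  // Hypothetical helper: keep every column whose name is NOT in `unwanted`.
  def selectAllExcept(df: DataFrame, unwanted: Set[String]): DataFrame = {
    val kept = df.columns.filterNot(unwanted.contains).map(col)
    df.select(kept: _*)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("select instead of drop")
      .master("local[1]")
      .getOrCreate()
    import spark.implicits._

    // Same sample rows as in this guide, built from tuples for brevity
    val testDF = Seq(
      (1, "rahul", 30, "yadav"),
      (2, "sanjay", 20, "gupta"),
      (3, "ranjan", 67, "kumar")
    ).toDF("roll", "first_name", "age", "last_name")

    val viaSelect = selectAllExcept(testDF, Set("roll", "age"))
    val viaDrop = testDF.drop("roll", "age")

    // Both approaches should leave the same columns behind
    viaSelect.printSchema()
    println(viaSelect.columns.sameElements(viaDrop.columns))

    spark.stop()
  }
}
```

drop() is usually the shorter choice when you only want to remove a few columns. select() tends to read better when the list of columns to keep is short, or when you also want to reorder them.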

Complete Code

    import org.apache.spark.sql.functions.col
    import org.apache.spark.sql.{Row, SparkSession}
    import org.apache.spark.sql.types.{IntegerType, StringType, StructField, StructType}

    object DroporDeleteColumn {

      def main(args: Array[String]): Unit = {

        // Local SparkSession for running the example on a single machine
        val sparkSession = SparkSession
          .builder()
          .appName("delete or drop column from a spark dataframe")
          .master("local")
          .getOrCreate()

        val schema = StructType(Array(
          StructField("roll", IntegerType, true),
          StructField("first_name", StringType, true),
          StructField("age", IntegerType, true),
          StructField("last_name", StringType, true)
        ))

        val data = Seq(
          Row(1, "rahul", 30, "yadav"),
          Row(2, "sanjay", 20, "gupta"),
          Row(3, "ranjan", 67, "kumar")
        )

        val rdd = sparkSession.sparkContext.parallelize(data)
        val testDF = sparkSession.createDataFrame(rdd, schema)

        // Drop the "roll" column; testDF itself is not modified
        val transformedDF = testDF.drop(col("roll"))

        // The printed schema no longer contains "roll"
        transformedDF.printSchema()

        sparkSession.stop()
      }
    }
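Two details of drop() are easy to miss in the complete code above: it returns a new DataFrame rather than modifying the original, and a column name that does not exist in the schema is silently ignored. The sketch below illustrates both and also shows dropping a list of names that is only known at runtime. The object DropSemantics and the helper dropAll are hypothetical names for this example, and the DataFrame is built with toDF on tuples for brevity.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object DropSemantics {

  // Hypothetical helper: drop a list of column names built at runtime.
  def dropAll(df: DataFrame, names: Seq[String]): DataFrame =
    df.drop(names: _*)

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("drop semantics")
      .master("local[1]")
      .getOrCreate()
    import spark.implicits._

    val testDF = Seq(
      (1, "rahul", 30, "yadav"),
      (2, "sanjay", 20, "gupta"),
      (3, "ranjan", 67, "kumar")
    ).toDF("roll", "first_name", "age", "last_name")

    // drop returns a new DataFrame; testDF keeps all four columns
    val trimmed = dropAll(testDF, Seq("roll", "age"))
    println(trimmed.columns.mkString(", ")) // first_name, last_name
    println(testDF.columns.mkString(", "))  // roll, first_name, age, last_name

    // Dropping a name that is not in the schema is a no-op, not an error
    val unchanged = testDF.drop("no_such_column")
    println(unchanged.columns.length)       // 4

    spark.stop()
  }
}
```

Because a missing name is ignored, a typo in a column name will not raise an error, so it is worth checking the resulting schema with printSchema() or columns after dropping.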

That's it! You've successfully dropped a column from a DataFrame in Spark using Scala.

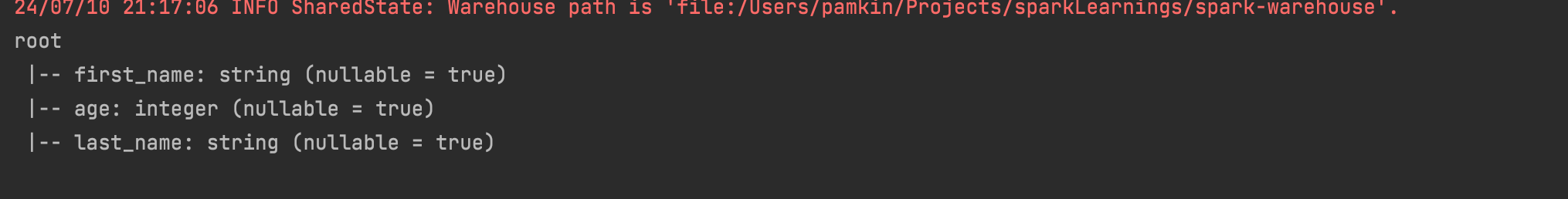

Output