Spark DataFrame: Show All Rows

How to Display DataFrame in Scala Spark with Examples

DataFrames are a crucial component in Spark for data manipulation and analysis. Displaying DataFrames in a readable format helps in understanding and debugging data transformations. Here, we'll demonstrate how to show a DataFrame in Scala Spark.

1. Sample Data

| Movie Name | Review |
|---|---|
| Kalki 2898 AD | "Kalki" is a cinematic marvel that seamlessly blends mythology with modern storytelling, and Prabhas delivers a performance that is both powerful and captivating. |
| Robot | This is one of the best movies I've ever watched. After 2000 all of Shankar's movies have been either a blockbuster or super hit. |

2. Import Libraries

import org.apache.spark.sql.{Row, SparkSession}

import org.apache.spark.sql.types.{StringType, StructField, StructType}

3. Create DataFrame

val schema = StructType(

Array(StructField("Movie Name", StringType, true), StructField("Review", StringType, true))

)

val data = Seq(

Row("Kalki 2898 AD","\"Kalki\" is a cinematic marvel that seamlessly blends mythology with modern storytelling, and Prabhas delivers a performance that is both powerful and captivating"),

Row("Robot", "This is one of the best movies I've ever watched. After 2000 all of Shankar's movies have been either a blockbuster or super hit.")

)

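// Assumption: a local session for experimentation; adjust appName and master for your environment

val sparkSession = SparkSession.builder().appName("ShowDataFrame").master("local[*]").getOrCreate()

val rdd = sparkSession.sparkContext.parallelize(data)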

val testDF = sparkSession.createDataFrame(rdd, schema)
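For small, hand-written data sets, the toDF helper from the session's implicits is a shorter route than an explicit schema plus an RDD. The sketch below is an alternative to the approach above, not a replacement for it. The object name MovieReviews, the build helper, the app name, and the local[*] master are assumptions made for illustration.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object MovieReviews {
  // Hypothetical helper: build the two-row sample DataFrame from tuples.
  // Both columns are inferred as nullable strings.
  def build(spark: SparkSession): DataFrame = {
    import spark.implicits._ // brings toDF into scope for Seq of tuples
    Seq(
      ("Kalki 2898 AD", "\"Kalki\" is a cinematic marvel that seamlessly blends mythology with modern storytelling, and Prabhas delivers a performance that is both powerful and captivating"),
      ("Robot", "This is one of the best movies I've ever watched. After 2000 all of Shankar's movies have been either a blockbuster or super hit.")
    ).toDF("Movie Name", "Review")
  }

  def main(args: Array[String]): Unit = {
    // Assumption: a local session is enough for trying this out
    val spark = SparkSession.builder()
      .appName("MovieReviews")
      .master("local[*]")
      .getOrCreate()

    val testDF = build(spark)
    testDF.printSchema()
    spark.stop()
  }
}
```

The explicit StructType version remains the better fit when column types or nullability need to be controlled precisely.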

4. Use show to print rows

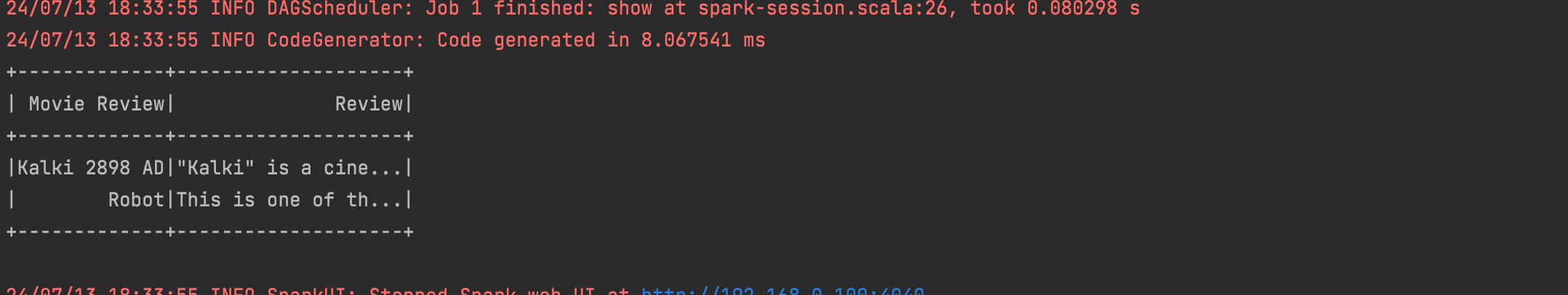

By default, show prints the first 20 rows and truncates string values longer than 20 characters.

testDF.show()
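The two-row sample is too small to reveal the 20-row default, so here is a minimal sketch with a larger DataFrame. It assumes a local session; the object name ShowDefaults and the helper numbers are hypothetical. With 25 rows, show() should print only ids 0 through 19, followed by a note that only the top rows are shown.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object ShowDefaults {
  // Hypothetical helper: a single-column DataFrame with ids 0 until n
  def numbers(spark: SparkSession, n: Long): DataFrame =
    spark.range(n).toDF("id")

  def main(args: Array[String]): Unit = {
    // Assumption: a local session is enough for trying this out
    val spark = SparkSession.builder()
      .appName("ShowDefaults")
      .master("local[*]")
      .getOrCreate()

    // 25 rows exceed the default limit, so the last five are not printed
    numbers(spark, 25).show()

    spark.stop()
  }
}
```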

5. Use show to print n rows

The statement below prints at most the first 10 rows:

testDF.show(10)

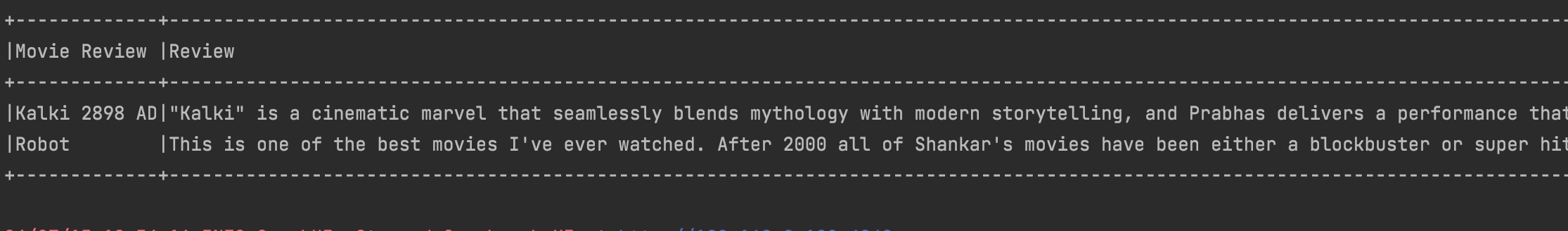

6. Use show with truncate argument

If you pass false as the second argument, show does not truncate column values, so long strings are printed in full instead of being cut off at 20 characters.

testDF.show(10,false)
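show has no dedicated "all rows" switch, so a common way to print every row is to pass the row count explicitly. The sketch below assumes the DataFrame is small enough to print in full: count() triggers a Spark job, and all output goes to the driver's console. The helper name showAll and the object name ShowVariants are hypothetical. The overloads show(numRows, truncate: Int) and show(numRows, truncate, vertical) belong to the Dataset API, the latter in Spark 2.3 and later as far as I know.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object ShowVariants {
  // Hypothetical helper: print every row without truncating any column.
  // count() runs a job, so this is only sensible for small DataFrames.
  def showAll(df: DataFrame): Unit =
    df.show(df.count().toInt, false)

  def main(args: Array[String]): Unit = {
    // Assumption: a local session is enough for trying this out
    val spark = SparkSession.builder()
      .appName("ShowVariants")
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    // Shortened sample reviews, used only to keep the example compact
    val testDF = Seq(
      ("Kalki 2898 AD", "\"Kalki\" is a cinematic marvel that seamlessly blends mythology with modern storytelling"),
      ("Robot", "This is one of the best movies I've ever watched.")
    ).toDF("Movie Name", "Review")

    showAll(testDF)          // all rows, full column values
    testDF.show(10, 30)      // up to 10 rows, strings cut to 30 characters
    testDF.show(10, 0, true) // up to 10 rows, no truncation, one field per line

    spark.stop()
  }
}
```

For large DataFrames, printing everything is rarely practical; limiting the row count or writing the result to storage is usually a safer choice.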