Filter and Where Conditions in Spark DataFrame PySpark

Learn how to use filter and where conditions on Spark DataFrames in PySpark. This tutorial walks through filtering rows by a condition so that you retrieve only the subset of data that meets your criteria.

Sample Data

| ID | First_Name | Age | Last_Name |
|---|---|---|---|
| 101 | Alice | 28 | Smith |
| 102 | Bob | 34 | Johnson |
| 103 | Charlie | 45 | Williams |
| 104 | David | 23 | Brown |

Step 1: Import Required Libraries

First, you need to import the necessary libraries:

    from pyspark.sql import SparkSession
    from pyspark.sql.functions import col, lit
    from pyspark.sql.types import IntegerType, StringType, StructField, StructType

Step 2: Create Sample DataFrame

For demonstration purposes, let's create a sample DataFrame:

    # Start (or reuse) a local Spark session
    spark = SparkSession.builder \
        .appName("Filter and Where Conditions") \
        .master("local") \
        .getOrCreate()

    # Explicit schema: column name, type, nullable
    schema = StructType([
        StructField("ID", IntegerType(), True),
        StructField("First_Name", StringType(), True),
        StructField("Age", IntegerType(), True),
        StructField("Last_Name", StringType(), True)
    ])

    data = [
        (101, "Alice", 28, "Smith"),
        (102, "Bob", 34, "Johnson"),
        (103, "Charlie", 45, "Williams"),
        (104, "David", 23, "Brown")
    ]

    df = spark.createDataFrame(data, schema)
    df.show()

Step 3: Apply Filter and Where Conditions

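Now, let's apply filter and where conditions to the DataFrame. In PySpark, where() is an alias for filter(), so the two calls below return the same rows: everyone older than 30.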

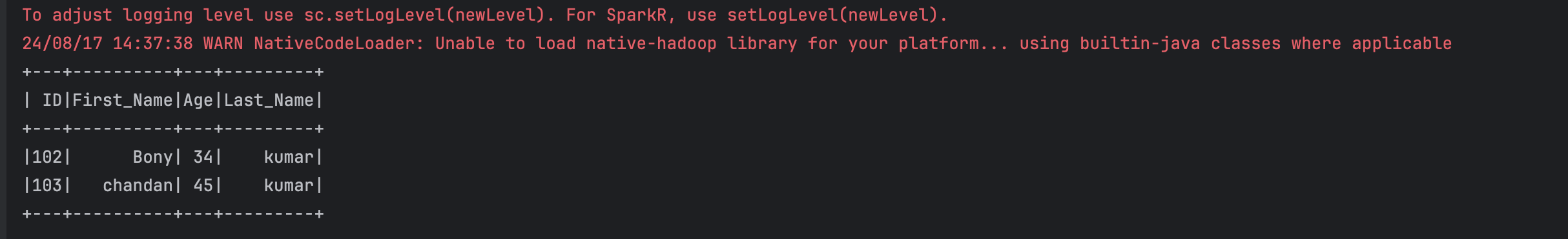

Using Filter:

    filtered_df = df.filter(col("Age") > lit(30))
    filtered_df.show()

Using Where:

    where_df = df.where(col("Age") > lit(30))
    where_df.show()

Complete Code

    from pyspark.sql import SparkSession
    from pyspark.sql.functions import col, lit
    from pyspark.sql.types import IntegerType, StringType, StructField, StructType

    def main():
        # Start (or reuse) a local Spark session
        spark = SparkSession.builder \
            .appName("Filter and Where Conditions") \
            .master("local") \
            .getOrCreate()

        # Explicit schema: column name, type, nullable
        schema = StructType([
            StructField("ID", IntegerType(), True),
            StructField("First_Name", StringType(), True),
            StructField("Age", IntegerType(), True),
            StructField("Last_Name", StringType(), True)
        ])

        data = [
            (101, "Alice", 28, "Smith"),
            (102, "Bob", 34, "Johnson"),
            (103, "Charlie", 45, "Williams"),
            (104, "David", 23, "Brown")
        ]

        df = spark.createDataFrame(data, schema)

        # Keep rows where Age is greater than 30, using filter
        filtered_df = df.filter(col("Age") > lit(30))
        filtered_df.show()

        # Same condition using where
        where_df = df.where(col("Age") > lit(30))
        where_df.show()

        spark.stop()

    if __name__ == "__main__":
        main()

That's it! You've successfully applied filter and where conditions to a DataFrame in Spark using PySpark.

Output