Guide to Apache PySpark DataFrame distinct() Method

Select distinct rows in PySpark DataFrame

The distinct() method of a PySpark DataFrame returns a new DataFrame containing only the unique rows. Two rows count as duplicates only when they match in every column. This guide walks through a small example.

Sample Data

| Roll | First Name | Age | Last Name |
|---|---|---|---|
| 1 | arjun | 30 | singh |
| 3 | Vikram | 67 | Sharma |
| 4 | Amit | 67 | Verma |
| 3 | Vikram | 67 | Sharma |

Step 1: Import Required Libraries

First, you need to import the necessary libraries:

from pyspark.sql import SparkSession

from pyspark.sql import Row

from pyspark.sql.types import StructType, StructField, IntegerType, StringType

Step 2: Create Sample DataFrame

For demonstration purposes, let's create a sample DataFrame:

# Initialize SparkSession

spark = SparkSession.builder.master("local").appName("distinct in pyspark").getOrCreate()

# Define the schema

schema = StructType([

StructField("roll", IntegerType(), True),

StructField("first_name", StringType(), True),

StructField("age", IntegerType(), True),

StructField("last_name", StringType(), True)

])

# Create the data

data = [

Row(1, "arjun", 30, "singh"),

Row(3, "Vikram", 67, "Sharma"),

Row(4, "Amit", 67, "Verma"),

Row(3, "Vikram", 67, "Sharma"),

]

# Parallelize the data and create DataFrame

rdd = spark.sparkContext.parallelize(data)

testDF = spark.createDataFrame(rdd, schema)

Step 3: Use distinct method

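Call distinct() on the DataFrame. It returns a new DataFrame in which rows that match on every column appear only once:

transformedDF = testDF.distinct()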
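To see why only one of the two identical Vikram rows survives, it can help to model the semantics in plain Python. The sketch below is not Spark code and says nothing about how Spark executes distinct(). It assumes each row is a tuple, and `distinct_rows` is a hypothetical helper written only for this illustration. Unlike Spark, which does not guarantee the order of the result, the helper keeps rows in first-seen order for readability.

```python
# Plain-Python model of distinct(): a row is dropped only when an identical
# row (same value in every column) has already been seen.
# distinct_rows is a hypothetical helper for illustration, not a PySpark API.

def distinct_rows(rows):
    seen = set()
    unique = []
    for row in rows:
        if row not in seen:  # compares the whole tuple, i.e. all columns
            seen.add(row)
            unique.append(row)
    return unique


rows = [
    (1, "arjun", 30, "singh"),
    (3, "Vikram", 67, "Sharma"),
    (4, "Amit", 67, "Verma"),
    (3, "Vikram", 67, "Sharma"),  # exact duplicate of the second row
]

result = distinct_rows(rows)
print(len(rows), "->", len(result))  # 4 -> 3

# Changing any single column (here, roll) makes the row unique again.
rows_with_new_roll = rows[:3] + [(2, "Vikram", 67, "Sharma")]
print(len(distinct_rows(rows_with_new_roll)))  # 4
```

The second case is the one to keep in mind: if the two Vikram rows had different roll numbers, distinct() would keep both, because the comparison covers every column.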

Complete Code

from pyspark.sql import SparkSession

from pyspark.sql import Row

from pyspark.sql.types import StructType, StructField, IntegerType, StringType

# Initialize SparkSession

spark = SparkSession.builder.master("local").appName("distinct in pyspark").getOrCreate()

# Define the schema

schema = StructType([

StructField("roll", IntegerType(), True),

StructField("first_name", StringType(), True),

StructField("age", IntegerType(), True),

StructField("last_name", StringType(), True)

])

# Create the data

data = [

Row(1, "arjun", 30, "singh"),

Row(3, "Vikram", 67, "Sharma"),

Row(4, "Amit", 67, "Verma"),

Row(3, "Vikram", 67, "Sharma"),

]

# Parallelize the data and create DataFrame

rdd = spark.sparkContext.parallelize(data)

testDF = spark.createDataFrame(rdd, schema)

transformedDF = testDF.distinct()

# Show the DataFrame

transformedDF.show()

That's it! You've successfully removed duplicate rows from a DataFrame in PySpark using distinct().

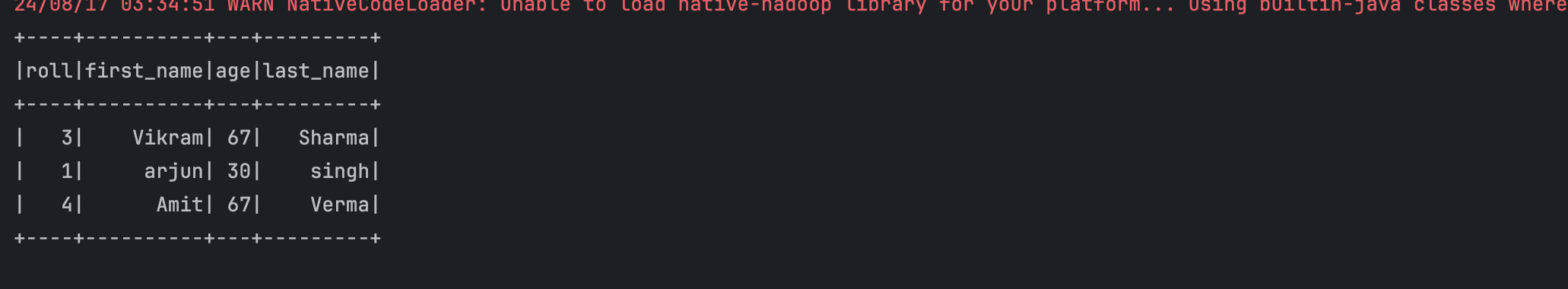

Output