How to Use orderBy in PySpark - Sorting DataFrames

Using orderBy or sort in PySpark to Sort DataFrames

Sorting data is a routine and often essential step in data processing and analysis. In PySpark, the DataFrame method orderBy (and its alias sort) returns a new DataFrame whose rows are ordered by one or more columns. This tutorial walks through using orderBy with practical examples and explanations.

Sample Data

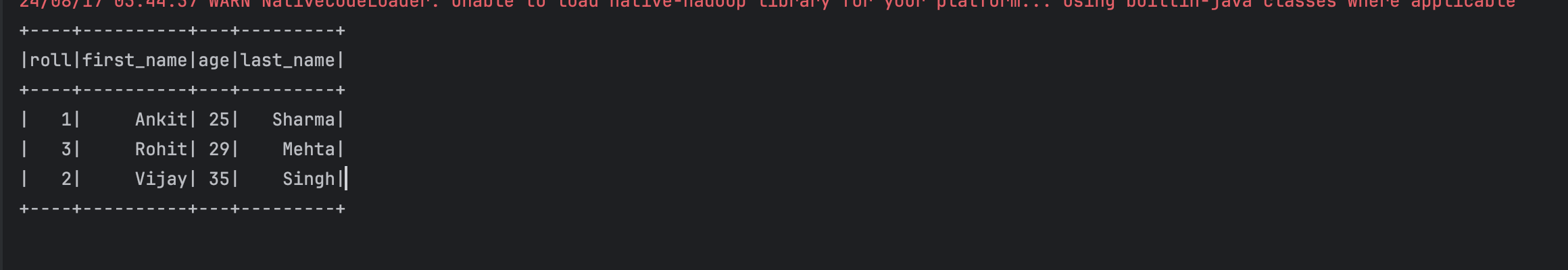

| Roll | First Name | Age | Last Name |
|---|---|---|---|
| 1 | Ankit | 25 | Sharma |
| 2 | Vijay | 35 | Singh |
| 3 | Rohit | 29 | Mehta |

PySpark Code Example

Here’s how you can sort the above DataFrame using PySpark:

```python
from pyspark.sql import SparkSession
from pyspark.sql import Row
from pyspark.sql.types import StructType, StructField, IntegerType, StringType
from pyspark.sql.functions import col

# Initialize Spark session
spark = SparkSession.builder \
    .appName("Order by or sort column in a Spark DataFrame") \
    .master("local") \
    .getOrCreate()

# Define the schema
schema = StructType([
    StructField("roll", IntegerType(), True),
    StructField("first_name", StringType(), True),
    StructField("age", IntegerType(), True),
    StructField("last_name", StringType(), True)
])

# Create data
data = [
    Row(1, "Ankit", 25, "Sharma"),
    Row(2, "Vijay", 35, "Singh"),
    Row(3, "Rohit", 29, "Mehta")
]

# Parallelize the data
rdd = spark.sparkContext.parallelize(data)

# Create DataFrame
testDF = spark.createDataFrame(rdd, schema)

# Order DataFrame by age
transformedDF = testDF.orderBy(col("age"))

# Show results
transformedDF.show()
```

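This sorts the DataFrame by the age column in ascending order, which is the default, so Rohit (29) appears between Ankit (25) and Vijay (35). Note that orderBy returns a new DataFrame; testDF itself is not modified.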

To sort in descending order instead, call desc() on the column:

```python
# Order DataFrame by age in descending order
transformedDF = testDF.orderBy(col("age").desc())

# Show results
transformedDF.show()
```

With orderBy (or its alias sort), you can sort a PySpark DataFrame by one or more columns, in ascending or descending order, to match the requirements of your analysis.