Using the map Function in PySpark - Comprehensive Guide

Understanding the map Function in PySpark

The map function is one of the fundamental transformations you can apply to Resilient Distributed Datasets (RDDs) in PySpark. This function allows you to apply a function to each element of the RDD, resulting in a new RDD containing the transformed elements.

What is the map Function in PySpark?

The map function in PySpark is used to apply a transformation to each element of an RDD. The function takes a lambda function or a named function as an argument, processes each element, and returns a new RDD with the transformed data. This is particularly useful in scenarios where you need to perform operations such as data cleaning, conversion, or any element-wise transformation.

Using the map Function in PySpark

Here’s how you can use the map function in PySpark:

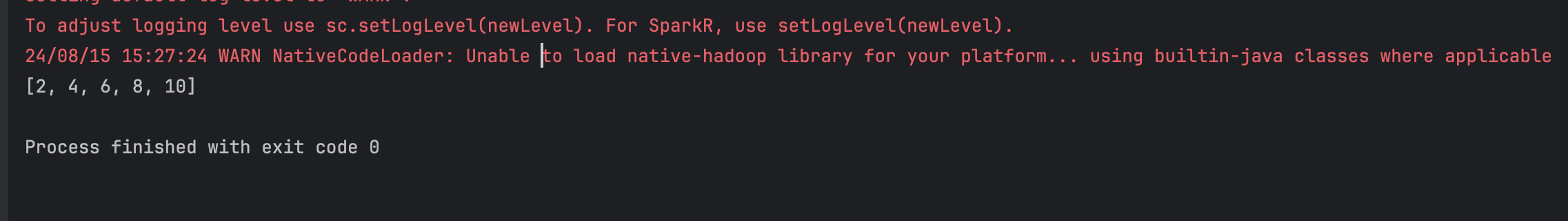

from pyspark.sql import SparkSession

from pyspark.sql.types import StructType, StructField, StringType

spark_session=SparkSession.builder.master("local").appName("simple map example Pyspark").getOrCreate();

data = [1, 2, 3, 4, 5]

rdd = spark_session.sparkContext.parallelize(data)

squared_rdd = rdd.map(lambda ele: ele * 2)

print(squared_rdd.collect())

Passing a function to PySpark Map - RDD

Here’s how you can use the map function in PySpark:

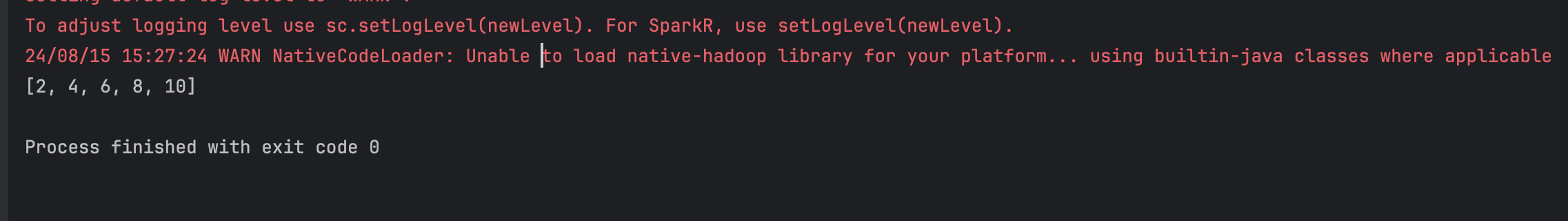

def square(ele):

val=ele*2

return val

data = [1, 2, 3, 4, 5]

rdd = spark_session.sparkContext.parallelize(data)

squared_rdd = rdd.map(square)

print(squared_rdd.collect())

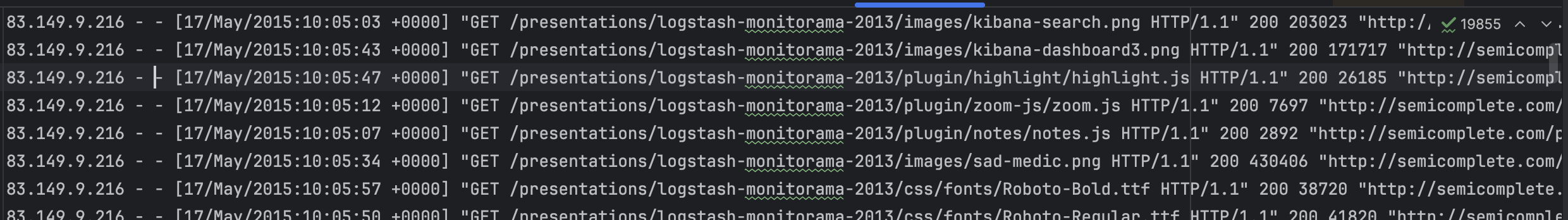

Real-World Example: Processing Log file

Let’s look at the below log file , we need to extract IP address from it. Please click here to download data and try this on your machine.

from pyspark.sql import SparkSession

spark_session=SparkSession.builder.master("local").appName("process log file using pyspark RDD").getOrCreate();

raw_rdd=spark_session.sparkContext.textFile("/Users/apple/PycharmProjects/pyspark/data/text/log.txt")

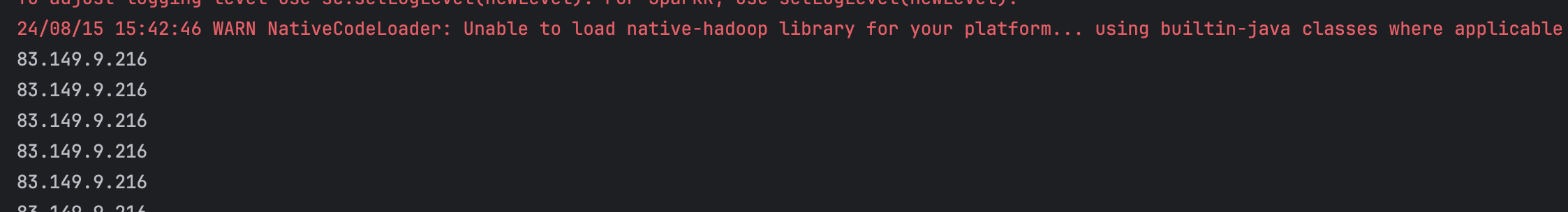

rdd=raw_rdd.map( lambda line: line.split('-')[0] )

for line in rdd.collect():

print(line)

Output:

Performance Considerations

While the map function is powerful, it’s important to be mindful of the potential performance implications when working with large datasets. Since the map function executes the transformation on each element of the RDD, ensuring that the transformation function is efficient is crucial for minimizing processing time.

Conclusion

The map function is an essential tool in the PySpark arsenal, enabling developers to apply transformations across RDDs with ease. By understanding how to use map effectively, you can unlock powerful data processing capabilities in your PySpark applications.