How to Use union in PySpark - Combining DataFrames

Using union in PySpark

Combining DataFrames is a common operation in data processing. In Apache PySpark, the union function appends the rows of one DataFrame to another DataFrame with the same schema, meaning the same number of columns, in the same order, with compatible types. This tutorial walks through using union with practical examples and explanations.

1. Creating Sample DataFrames

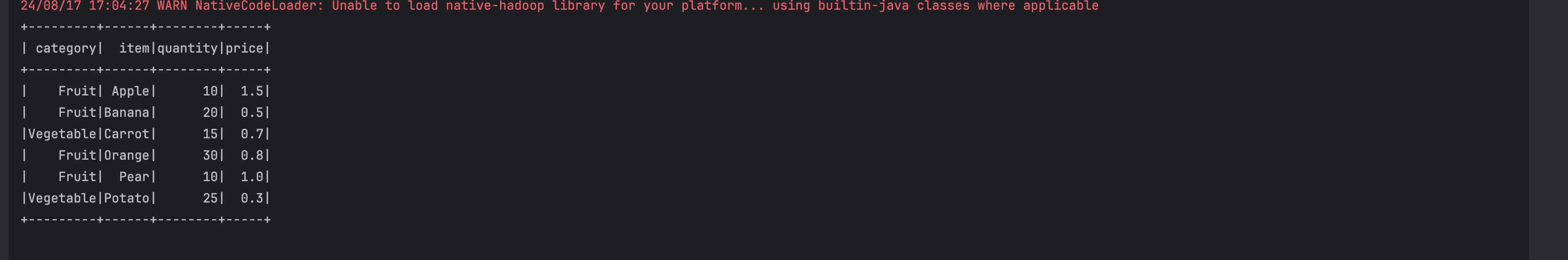

The examples use two small DataFrames with the same four columns.

First DataFrame (df1):

| Category | Item | Quantity | Price |
|---|---|---|---|
| Fruit | Apple | 10 | 1.5 |
| Fruit | Banana | 20 | 0.5 |
| Vegetable | Carrot | 15 | 0.7 |

Second DataFrame (df2):

| Category | Item | Quantity | Price |
|---|---|---|---|
| Fruit | Orange | 30 | 0.8 |
| Fruit | Pear | 10 | 1.0 |
| Vegetable | Potato | 25 | 0.3 |

2. Importing Necessary Libraries

Before creating the DataFrames, import the classes needed to start a Spark session, define the schema, and build the rows:

```python
from pyspark.sql import SparkSession
from pyspark.sql.types import DoubleType, IntegerType, StringType, StructField, StructType
from pyspark.sql import Row
```

3. Performing the union Operation

Now that we have our DataFrames, we can combine them using the union function:

```python
combined_df = df1.union(df2)
```

Complete Code

```python
from pyspark.sql import SparkSession
from pyspark.sql.types import DoubleType, IntegerType, StringType, StructField, StructType
from pyspark.sql import Row

# Initialize SparkSession
spark = SparkSession.builder \
    .appName("Use union in PySpark") \
    .master("local") \
    .getOrCreate()

# Define the schema
schema = StructType([
    StructField("category", StringType(), True),
    StructField("item", StringType(), True),
    StructField("quantity", IntegerType(), True),
    StructField("price", DoubleType(), True)
])

# Create the data for the first DataFrame
data1 = [
    Row("Fruit", "Apple", 10, 1.5),
    Row("Fruit", "Banana", 20, 0.5),
    Row("Vegetable", "Carrot", 15, 0.7)
]

# Create the data for the second DataFrame
data2 = [
    Row("Fruit", "Orange", 30, 0.8),
    Row("Fruit", "Pear", 10, 1.0),
    Row("Vegetable", "Potato", 25, 0.3)
]

# Create the DataFrames
rdd1 = spark.sparkContext.parallelize(data1)
df1 = spark.createDataFrame(rdd1, schema)
rdd2 = spark.sparkContext.parallelize(data2)
df2 = spark.createDataFrame(rdd2, schema)

# Perform the union operation
combined_df = df1.union(df2)

# Show the result
combined_df.show()
```

4. Output

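Running the script prints the six rows of combined_df: the three rows of df1 followed by the three rows of df2, under the column names defined in the schema.

In this tutorial, we demonstrated how to use the union function in PySpark to combine two DataFrames with the same schema. Because union simply appends rows, without a shuffle and without removing duplicates, it is an inexpensive way to stack data from several sources, such as daily batches of the same table, into a single DataFrame.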