Read a Text File Using PySpark

How to Read a Text File Using PySpark with Example

Reading a text file in PySpark is straightforward. The textFile method of the SparkContext returns an RDD of lines, so to obtain a DataFrame you should use spark.read.text instead. This method loads the text file into a DataFrame with a single string column named value, where each line of the file becomes one row by default. The path can point to a local file, a directory, or a Hadoop-supported file system such as HDFS. Working with a DataFrame makes it easier to filter, transform, and analyze the text with the rest of the Spark SQL API.

from pyspark.sql import SparkSession

spark_session = SparkSession.builder.master("local").appName("Read text file using pyspark with example").getOrCreate()

textfile_df_with_schema = spark_session.read.text("/Users/apple/PycharmProjects/pyspark/data/text/data.txt")

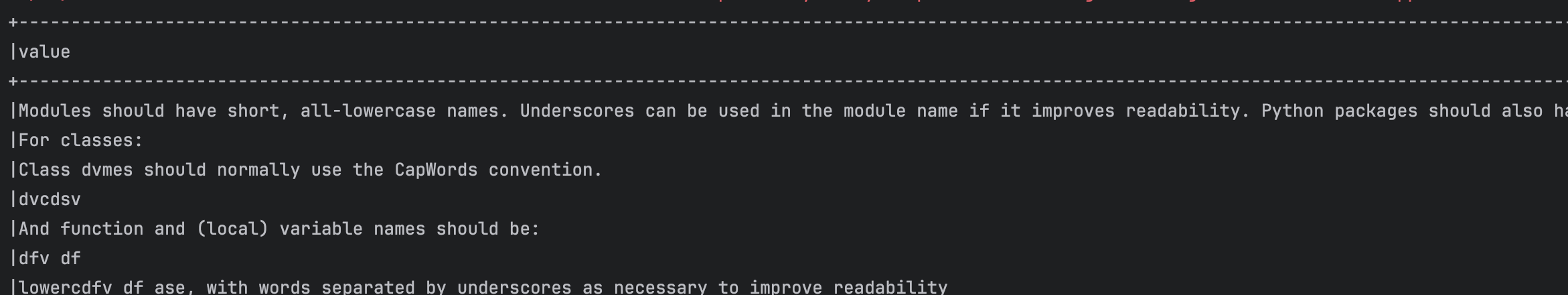

textfile_df_with_schema.show(truncate=False)

Output:

The following list contains some of the most commonly used options when working with a text file:

- lineSep: Defines the line separator used to split the file into records. When reading, Spark recognizes \r, \r\n, and \n by default. Set this option when the file uses a different separator, for example a custom delimiter between records.

- wholetext: When set to true, each input file is read as a single row instead of one row per line, so the entire file content becomes one record. The default is false. This is useful when files contain large blocks of text or when each file must be processed as a single entity.

- compression: Specifies the compression codec to use when saving files, so it applies to writing rather than reading. Common values include none, snappy, gzip, bzip2, lz4, and deflate. When reading, Spark typically detects compressed files from their file extension (for example .gz) and decompresses them without this option. Choose the codec based on the trade-off you need between file size and speed.