PySpark: read CSV from the local file system

How to read CSV with header using PySpark

CSV files are a popular format for data storage, and Spark can load them directly into DataFrames. In this guide, we'll read a CSV file using PySpark: setting up a Spark session, loading the file into a DataFrame (with a header row and with an explicit schema), and reviewing the most commonly used reader options. By the end, you'll be able to handle CSV files in your own PySpark applications. Let's go through it step by step.

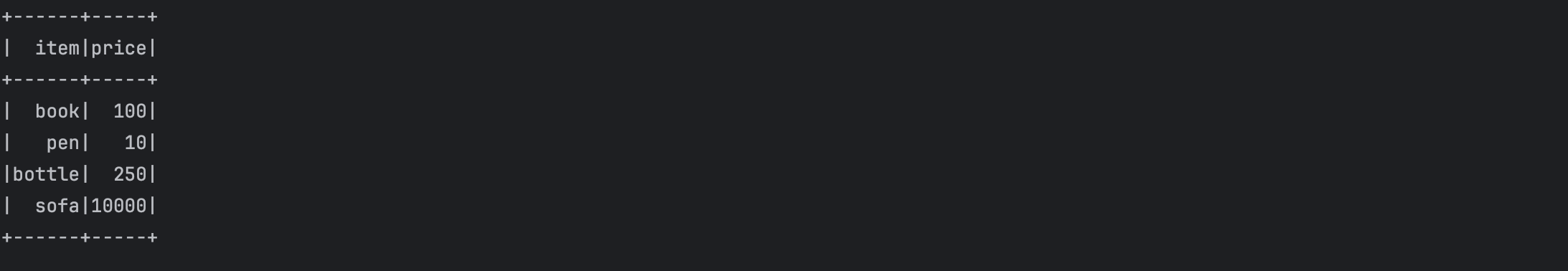

Item Prices

| Item | Price |
|---|---|
| Book | 100 |
| Pen | 10 |
| Bottle | 250 |
| Sofa | 10000 |
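The article does not show the raw contents of its input files. To follow along, a minimal sketch like the one below can create them, assuming `data.csv` holds the table above with an `Item,Price` header row and `data_without_header.csv` holds the same rows without it. The temporary directory is an assumption standing in for the article's `/Users/apple/...` path.

```python
import os
import tempfile

# Rows from the "Item Prices" table (assumed to be the contents of data.csv).
rows = [("Book", 100), ("Pen", 10), ("Bottle", 250), ("Sofa", 10000)]

# Hypothetical location; the article itself reads from /Users/apple/PycharmProjects/pyspark/data/csv/.
data_dir = tempfile.mkdtemp()
with_header_path = os.path.join(data_dir, "data.csv")
without_header_path = os.path.join(data_dir, "data_without_header.csv")

# File with a header row: first line holds the column names.
with open(with_header_path, "w") as f:
    f.write("Item,Price\n")
    for item, price in rows:
        f.write(f"{item},{price}\n")

# Same rows without a header row.
with open(without_header_path, "w") as f:
    for item, price in rows:
        f.write(f"{item},{price}\n")
```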

Read CSV with header using PySpark

```python
from pyspark.sql import SparkSession
from pyspark.sql.types import StructType, StructField, StringType, IntegerType

# Start (or reuse) a Spark session that runs locally.
spark_session = SparkSession.builder.master("local").appName("testing").getOrCreate()

# header=True: take the column names from the first line of the file.
raw_df_with_header = spark_session.read.option("header", True).csv("/Users/apple/PycharmProjects/pyspark/data/csv/data.csv")

raw_df_with_header.show()
```

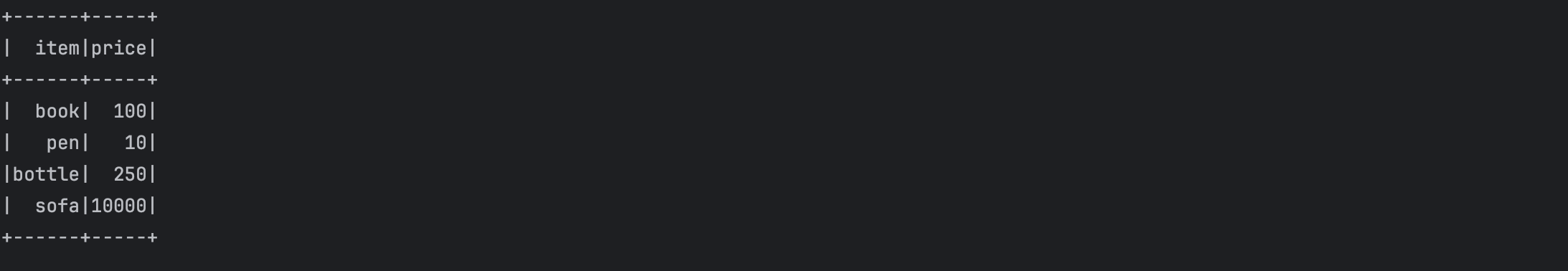

Output:

Read CSV with schema using PySpark

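```python
# Define the column names and types explicitly.
schema = StructType([
    StructField("item", StringType(), True),
    StructField("price", IntegerType(), True),
])

# Apply the schema with .schema(); option("schema", schema) does not apply it.
csv_df_with_schema = spark_session.read.option("header", False).schema(schema).csv("/Users/apple/PycharmProjects/pyspark/data/csv/data_without_header.csv")

csv_df_with_schema.show()
```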

Output:

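The following are some of the most commonly used options when reading a CSV file:

- inferSchema: infers the column types automatically from the data. This requires one extra pass over the input; without it (and without an explicit schema), every column is read as a string.

- multiLine: allows a single record to span multiple lines, for example when a quoted field contains a line break. CSV built-in functions such as `from_csv` ignore this option.

- delimiter (an alias of sep): a custom field separator instead of the default comma.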

Complete Code Example

```python
from pyspark.sql import SparkSession
from pyspark.sql.types import StructType, StructField, StringType, IntegerType

# Start (or reuse) a Spark session that runs locally.
spark_session = SparkSession.builder.master("local").appName("testing").getOrCreate()

schema = StructType([
    StructField("item", StringType(), True),
    StructField("price", IntegerType(), True),
])

# 1. File with a header row: column names come from the first line.
raw_df_with_header = spark_session.read.option("header", True).csv("/Users/apple/PycharmProjects/pyspark/data/csv/data.csv")
raw_df_with_header.show()

# 2. File without a header row: names and types come from the explicit schema.
csv_df_with_schema = spark_session.read.option("header", False).schema(schema).csv("/Users/apple/PycharmProjects/pyspark/data/csv/data_without_header.csv")
csv_df_with_schema.show()
```