How to use groupBy with multiple columns in PySpark

How to Use groupBy in PySpark: A Guide to Grouping and Aggregating Data

Grouping and aggregating data is a core task in data analysis. In PySpark, the `groupBy` method groups the rows of a DataFrame by one or more columns so that you can apply aggregate functions such as `sum` to each group. This tutorial walks through `groupBy` on a single column and on multiple columns, with practical examples and explanations.

Sample Data

| Roll | First Name | Age | Last Name | Subject | Marks |
|---|---|---|---|---|---|
| 1 | rahul | 18 | yadav | PHYSICS | 80 |
| 1 | rahul | 18 | yadav | CHEMISTRY | 77 |
| 1 | rahul | 18 | yadav | BIOLOGY | 70 |
| 2 | Vinay | 17 | kumar | PHYSICS | 80 |
| 2 | Vinay | 17 | kumar | CHEMISTRY | 77 |
| 2 | Vinay | 17 | kumar | BIOLOGY | 66 |

Step 1: Import Required Libraries

First, you need to import the necessary libraries:

    from pyspark.sql import SparkSession, functions as F
    from pyspark.sql.types import IntegerType, StringType, StructField, StructType
    from pyspark.sql import Row

Step 2: Create Sample DataFrame

For demonstration purposes, let's create a sample DataFrame:

    # Initialize SparkSession (needed before any DataFrame can be created)
    spark = SparkSession.builder \
        .appName("Group by in PySpark DataFrame") \
        .master("local") \
        .getOrCreate()

    # Define the schema
    schema = StructType([
        StructField("roll", IntegerType(), True),
        StructField("first_name", StringType(), True),
        StructField("age", IntegerType(), True),
        StructField("last_name", StringType(), True),
        StructField("subject", StringType(), True),
        StructField("Marks", IntegerType(), True)
    ])

    # Create the data
    data = [
        Row(1, "rahul", 18, "yadav", "PHYSICS", 80),
        Row(1, "rahul", 18, "yadav", "CHEMISTRY", 77),
        Row(1, "rahul", 18, "yadav", "BIOLOGY", 70),
        Row(2, "Vinay", 17, "kumar", "PHYSICS", 80),
        Row(2, "Vinay", 17, "kumar", "CHEMISTRY", 77),
        Row(2, "Vinay", 17, "kumar", "BIOLOGY", 66)
    ]

    # Create the DataFrame
    rdd = spark.sparkContext.parallelize(data)
    df = spark.createDataFrame(rdd, schema)

Step 3: Use groupBy with a Single Column

    grouped_df = df.groupBy("roll").agg(F.sum("Marks").alias("total_marks"))
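To make the result concrete without starting a Spark session, the following plain-Python sketch reproduces what `groupBy("roll")` followed by `F.sum("Marks")` computes for the sample rows used in this tutorial. It only illustrates the grouping logic and is not how Spark executes the query; the helper name `total_marks_by_roll` is hypothetical.

```python
from collections import defaultdict

# Sample rows: (roll, first_name, age, last_name, subject, marks)
rows = [
    (1, "rahul", 18, "yadav", "PHYSICS", 80),
    (1, "rahul", 18, "yadav", "CHEMISTRY", 77),
    (1, "rahul", 18, "yadav", "BIOLOGY", 70),
    (2, "Vinay", 17, "kumar", "PHYSICS", 80),
    (2, "Vinay", 17, "kumar", "CHEMISTRY", 77),
    (2, "Vinay", 17, "kumar", "BIOLOGY", 66),
]


def total_marks_by_roll(rows):
    """Sum the marks column for each distinct roll value (hypothetical helper)."""
    totals = defaultdict(int)
    for roll, _first, _age, _last, _subject, marks in rows:
        totals[roll] += marks
    return dict(totals)


print(total_marks_by_roll(rows))  # {1: 227, 2: 223}
```

The Spark result should contain the same two groups, with a `total_marks` of 227 for roll 1 and 223 for roll 2. The row order printed by `show()` is not guaranteed.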

Step 4: Use groupBy with Multiple Columns

    grouped_df = df.groupBy("roll", "first_name", "last_name").agg(F.sum("Marks").alias("total_marks"))
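When several columns are passed to `groupBy`, each distinct combination of their values forms one group. The plain-Python sketch below mimics that behaviour with tuple keys, assuming the same sample rows as the tutorial; `group_sum` is a hypothetical helper written for illustration, not a PySpark function.

```python
from collections import defaultdict

columns = ("roll", "first_name", "age", "last_name", "subject", "Marks")
data = [
    (1, "rahul", 18, "yadav", "PHYSICS", 80),
    (1, "rahul", 18, "yadav", "CHEMISTRY", 77),
    (1, "rahul", 18, "yadav", "BIOLOGY", 70),
    (2, "Vinay", 17, "kumar", "PHYSICS", 80),
    (2, "Vinay", 17, "kumar", "CHEMISTRY", 77),
    (2, "Vinay", 17, "kumar", "BIOLOGY", 66),
]
# Turn each tuple into a dict so columns can be looked up by name
rows = [dict(zip(columns, values)) for values in data]


def group_sum(rows, keys, value):
    """Sum `value` for each distinct combination of the `keys` columns."""
    totals = defaultdict(int)
    for row in rows:
        group = tuple(row[k] for k in keys)  # one group per unique key combination
        totals[group] += row[value]
    return dict(totals)


by_student = group_sum(rows, ("roll", "first_name", "last_name"), "Marks")
print(by_student)
# {(1, 'rahul', 'yadav'): 227, (2, 'Vinay', 'kumar'): 223}

by_roll_subject = group_sum(rows, ("roll", "subject"), "Marks")
print(len(by_roll_subject))  # 6
```

Because every roll number in the sample data maps to exactly one first and last name, adding those columns keeps the same two groups and simply carries the names into the result. Grouping by `roll` and `subject` instead would yield six groups, one per row.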

Complete Code (Single Column)

    from pyspark.sql import SparkSession, functions as F
    from pyspark.sql.types import IntegerType, StringType, StructField, StructType
    from pyspark.sql import Row

    # Initialize SparkSession
    spark = SparkSession.builder \
        .appName("Group by in PySpark DataFrame") \
        .master("local") \
        .getOrCreate()

    # Define the schema
    schema = StructType([
        StructField("roll", IntegerType(), True),
        StructField("first_name", StringType(), True),
        StructField("age", IntegerType(), True),
        StructField("last_name", StringType(), True),
        StructField("subject", StringType(), True),
        StructField("Marks", IntegerType(), True)
    ])

    # Create the data
    data = [
        Row(1, "rahul", 18, "yadav", "PHYSICS", 80),
        Row(1, "rahul", 18, "yadav", "CHEMISTRY", 77),
        Row(1, "rahul", 18, "yadav", "BIOLOGY", 70),
        Row(2, "Vinay", 17, "kumar", "PHYSICS", 80),
        Row(2, "Vinay", 17, "kumar", "CHEMISTRY", 77),
        Row(2, "Vinay", 17, "kumar", "BIOLOGY", 66)
    ]

    # Create the DataFrame
    rdd = spark.sparkContext.parallelize(data)
    df = spark.createDataFrame(rdd, schema)

    # Perform groupBy and aggregation
    grouped_df = df.groupBy("roll").agg(F.sum("Marks").alias("total_marks"))

    # Show the result
    grouped_df.show()

    # Stop the SparkSession
    spark.stop()

Complete Code (Multiple Columns)

    from pyspark.sql import SparkSession, functions as F
    from pyspark.sql.types import IntegerType, StringType, StructField, StructType
    from pyspark.sql import Row

    # Initialize SparkSession
    spark = SparkSession.builder \
        .appName("Group by in PySpark DataFrame") \
        .master("local") \
        .getOrCreate()

    # Define the schema
    schema = StructType([
        StructField("roll", IntegerType(), True),
        StructField("first_name", StringType(), True),
        StructField("age", IntegerType(), True),
        StructField("last_name", StringType(), True),
        StructField("subject", StringType(), True),
        StructField("Marks", IntegerType(), True)
    ])

    # Create the data
    data = [
        Row(1, "rahul", 18, "yadav", "PHYSICS", 80),
        Row(1, "rahul", 18, "yadav", "CHEMISTRY", 77),
        Row(1, "rahul", 18, "yadav", "BIOLOGY", 70),
        Row(2, "Vinay", 17, "kumar", "PHYSICS", 80),
        Row(2, "Vinay", 17, "kumar", "CHEMISTRY", 77),
        Row(2, "Vinay", 17, "kumar", "BIOLOGY", 66)
    ]

    # Create the DataFrame
    rdd = spark.sparkContext.parallelize(data)
    df = spark.createDataFrame(rdd, schema)

    # Perform groupBy on multiple columns and aggregate
    grouped_df = df.groupBy("roll", "first_name", "last_name").agg(F.sum("Marks").alias("total_marks"))

    # Show the result
    grouped_df.show()

    # Stop the SparkSession
    spark.stop()

That's it! You've successfully applied groupBy to a DataFrame in PySpark.

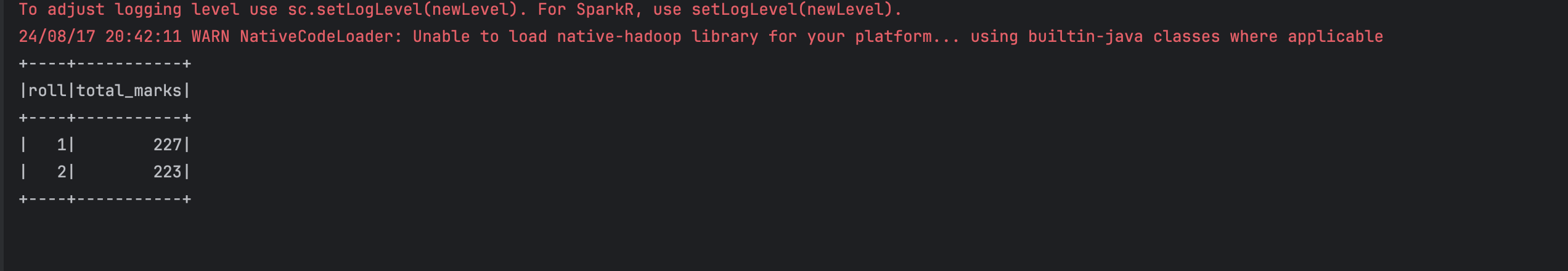

Output