Using the mapValues Function in PySpark - Comprehensive Guide

Understanding the mapValues Function in PySpark

The mapValues function in PySpark is a powerful transformation used on pair RDDs (key-value pairs). It allows you to apply a function to each value in the RDD while keeping the key intact. This operation is essential for many data processing tasks, particularly when you need to transform values without affecting their associated keys.

What is the mapValues Function in PySpark?

The mapValues function in PySpark is specifically designed for key-value pairs. Unlike the map function, which can modify both keys and values, mapValues only alters the values, ensuring that the keys remain unchanged. This makes it an ideal choice for scenarios where the key structure needs to be preserved.

Using the mapValues Function in PySpark

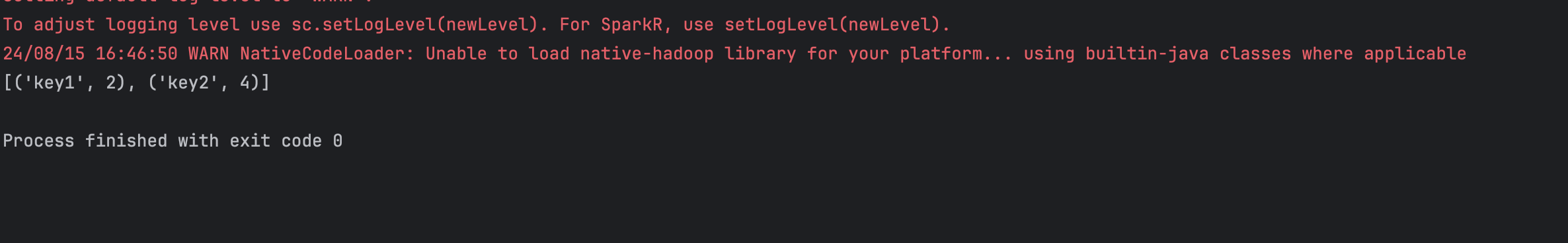

Here’s a basic example of how to use the mapValues function:

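# Example of using mapValues to modify values in key-value pairs

from pyspark.sql import SparkSession

spark_session = SparkSession.builder.master("local").appName("mapValues in pyspark").getOrCreate()

data = [("key1", 1), ("key2", 2)]

rdd = spark_session.sparkContext.parallelize(data)

# Double each value; the keys are left untouched
mapped_rdd = rdd.mapValues(lambda x: x * 2)

print(mapped_rdd.collect())  # [('key1', 2), ('key2', 4)]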

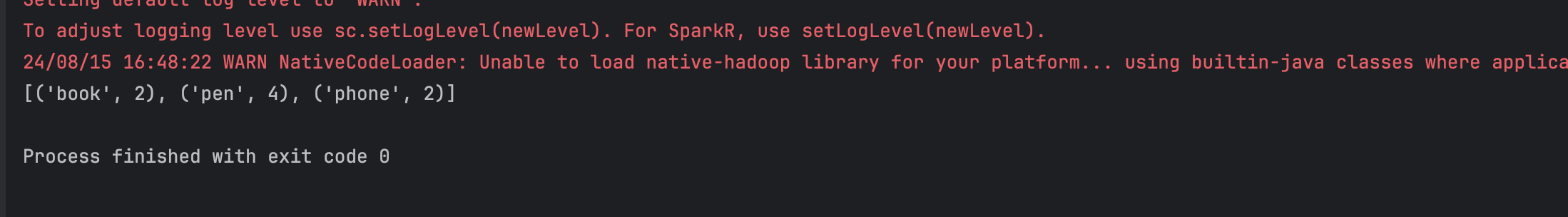

Real-World Example: Adjusting Item Quantities

Imagine you have a product list with quantities that need to be adjusted. The mapValues function can be used to increase or decrease each quantity while keeping the product names intact:

from pyspark.sql import SparkSession

spark_session = SparkSession.builder.master("local").appName("mapValues in pyspark").getOrCreate()

# Example of using mapValues to double the quantity of each item while keeping the item names as keys

item_list = [("book", 1), ("pen", 2), ("phone", 1)]

rdd = spark_session.sparkContext.parallelize(item_list)

mapped_rdd = rdd.mapValues(lambda item_value: item_value * 2)

print(mapped_rdd.collect())  # [('book', 2), ('pen', 4), ('phone', 2)]

Performance Considerations

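The mapValues function is efficient because it preserves the partitioner of the parent RDD. Since the keys cannot change, Spark knows that every record still belongs to the partition it is already in, so a later key-based operation such as reduceByKey or join on an already-partitioned RDD can avoid an extra shuffle. A plain map, by contrast, discards the partitioner because it might have changed the keys. The function you pass to mapValues still runs once per record, however, so an expensive function remains expensive regardless of which transformation applies it.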

Conclusion

The mapValues function is a crucial tool in PySpark for transforming the values in key-value pairs while preserving the keys. Its efficiency and targeted functionality make it a go-to option for many data transformation tasks in large-scale data processing.