Read a Parquet File Using PySpark

How to Read a Parquet File Using PySpark with Example

Apache Parquet is an open-source columnar storage format designed for big data workloads. It is widely used across the analytics ecosystem because of its fast reads and compact files, and it is the default data source format in Apache Spark.

Because Parquet stores data column by column, values of the same type sit next to each other, which enables efficient compression and encoding. A query can read only the columns it needs instead of whole rows, which reduces I/O. These properties make Parquet a common choice for large-scale data processing, and Spark's built-in support for it makes it a natural fit for data analysis with PySpark.
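To make the row-versus-column distinction concrete, here is a toy model in plain Python (no Spark needed). It stores the sample records in a row-oriented and a column-oriented layout and counts how many stored values a reader has to scan to get the Age column. This is a simplification under stated assumptions: real Parquet files hold encoded, compressed column chunks grouped into row groups, and the helpers `row_layout` and `column_layout` are hypothetical names used only for illustration.

```python
# Toy model only: real Parquet stores encoded, compressed column chunks
# inside row groups, not Python lists. Helper names are hypothetical.
FIELDS = ["Roll", "Name", "Age"]
records = [
    {"Roll": 1, "Name": "Rahul", "Age": 30},
    {"Roll": 2, "Name": "Sanjay", "Age": 67},
    {"Roll": 3, "Name": "Ranjan", "Age": 67},
]


def row_layout(rows, fields):
    """Row-oriented: every field of record 1, then every field of record 2, ..."""
    return [row[f] for row in rows for f in fields]


def column_layout(rows, fields):
    """Column-oriented: each field is stored as one contiguous chunk."""
    return {f: [row[f] for row in rows] for f in fields}


row_store = row_layout(records, FIELDS)
column_store = column_layout(records, FIELDS)

# Row layout: Age values are interleaved with Roll and Name, so a sequential
# reader scans the whole store and keeps every third value.
age_position = FIELDS.index("Age")
ages_from_rows = row_store[age_position::len(FIELDS)]
scanned_row = len(row_store)

# Column layout: only the Age chunk is read; Roll and Name are skipped entirely.
ages_from_columns = column_store["Age"]
scanned_column = len(ages_from_columns)

print(ages_from_rows, scanned_row)        # values scanned: all 9
print(ages_from_columns, scanned_column)  # values scanned: only 3
```

Both layouts return the same ages, but the columnar one touches a third of the stored values; with wide tables the gap grows with the number of skipped columns.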

Why is the Parquet format important in Spark?

- Efficient Data Compression: Parquet compresses each column separately with a codec such as Snappy or gzip and applies encodings such as dictionary and run-length encoding. Because values within one column tend to be similar, they compress well, which reduces disk usage and the amount of data that has to be read from storage. This is particularly beneficial for large datasets.

- Columnar Storage: Parquet stores data column by column rather than row by row. Analytical queries usually touch only a few columns, so Spark can skip the others and read less data. This makes Parquet well suited to read-heavy workloads on large datasets.

- Schema Evolution: Parquet supports schema evolution, so you can add or remove columns in newer files without rewriting existing data. When files with different but compatible schemas are read together, Spark can merge them (see the mergeSchema option below), so schema changes need not disrupt ongoing processing.
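The compression and columnar-storage points reinforce each other: once a column's values are stored together, repeated values end up next to each other. The toy sketch below shows two encodings of the kind Parquet applies to column data before a compression codec runs: dictionary encoding followed by run-length encoding. It is illustrative only. Parquet's actual on-disk encodings (for example its RLE/bit-packing hybrid) are more involved, and the `country` column and the helper functions are hypothetical.

```python
from itertools import groupby


def dictionary_encode(values):
    """Replace each value with a small integer index into a list of distinct values."""
    dictionary, index_of, indices = [], {}, []
    for value in values:
        if value not in index_of:
            index_of[value] = len(dictionary)
            dictionary.append(value)
        indices.append(index_of[value])
    return dictionary, indices


def run_length_encode(indices):
    """Collapse runs of identical indices into (index, run_length) pairs."""
    return [(key, len(list(group))) for key, group in groupby(indices)]


def decode(dictionary, runs):
    """Expand the runs and map indices back to the original values."""
    return [dictionary[key] for key, length in runs for _ in range(length)]


# Hypothetical low-cardinality column, as it would look when stored column-wise.
country = ["IN"] * 4 + ["US"] * 3 + ["IN"] * 2

dictionary, indices = dictionary_encode(country)
runs = run_length_encode(indices)

print(dictionary)  # ['IN', 'US']
print(runs)        # [(0, 4), (1, 3), (0, 2)]
```

Nine string values shrink to a two-entry dictionary plus three runs, and `decode` restores the column exactly. In a row-oriented layout the same values would be interleaved with other fields, so runs like these would rarely form.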

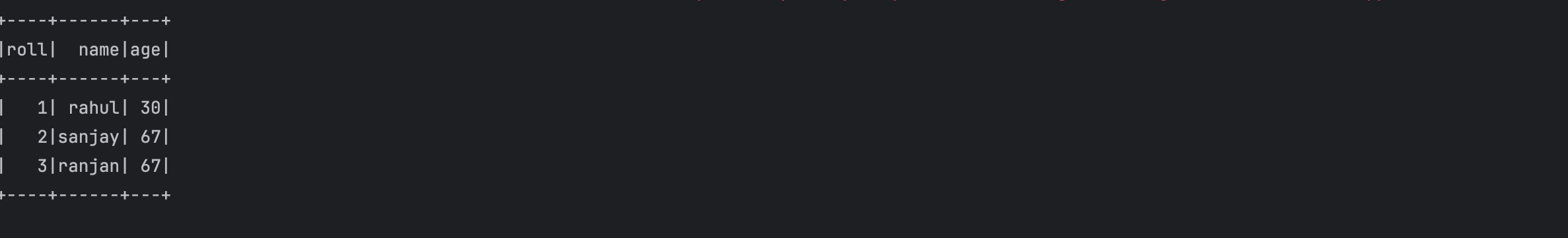

Sample Data

| Roll | Name | Age |
|---|---|---|
| 1 | Rahul | 30 |
| 2 | Sanjay | 67 |
| 3 | Ranjan | 67 |

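```python
from pyspark.sql import SparkSession

# Create (or reuse) a Spark session that runs locally.
spark_session = SparkSession.builder.master("local").appName("testing").getOrCreate()

# Read the Parquet data; the schema is taken from the file itself.
parquet_df_with_schema = spark_session.read.parquet("/Users/apple/PycharmProjects/pyspark/data/parquet/user.parquet")

# Print the first rows of the DataFrame to the console.
parquet_df_with_schema.show()
```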

Output:

The list below describes some commonly used Parquet options in Spark. mergeSchema applies when reading, while compression applies when writing.

- mergeSchema: Determines whether Spark merges the schemas collected from all Parquet part-files. When enabled, Spark combines the schemas of multiple files, accommodating columns that differ from file to file. This is useful for datasets whose schema has evolved or is inconsistent across files. It is disabled by default (controlled globally by spark.sql.parquet.mergeSchema) because schema merging is a relatively expensive operation.

- compression: Specifies the compression codec to use when saving files. It accepts case-insensitive names such as none, uncompressed, snappy, gzip, lzo, brotli, lz4, or zstd; the default is snappy. Choosing a codec is a trade-off between file size and CPU cost: gzip and zstd typically produce smaller files, while snappy is faster to compress and decompress.