PySpark: print word frequency

Word count program in PySpark - word frequency

Word count is one of the most common tasks in data processing, often used as a simple example to introduce big data processing frameworks like Apache Spark. PySpark, the Python API for Spark, allows you to leverage the power of distributed computing to perform word count operations on large datasets efficiently. In this blog post, we'll walk you through creating a word count program in PySpark that calculates word frequency.

Text file contents

| i like pyspark program |

| pyspark is very simple |

| i like pyspark program |

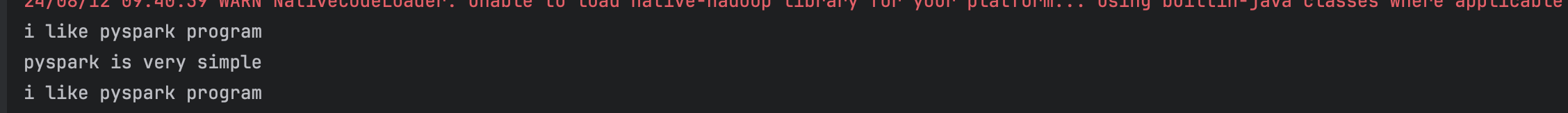

1. Read the text file and print its contents

rdd = spark_session.sparkContext.textFile("/Users/apple/PycharmProjects/pyspark/data/text/word_count.txt")
data = rdd.collect()
for x in data:
    print(x)

Output:

2. Split each line on spaces (' ') using flatMap

df_split = rdd.flatMap(lambda data: data.split(' '))
data = df_split.collect()
for x in data:
    print(x)

Output:

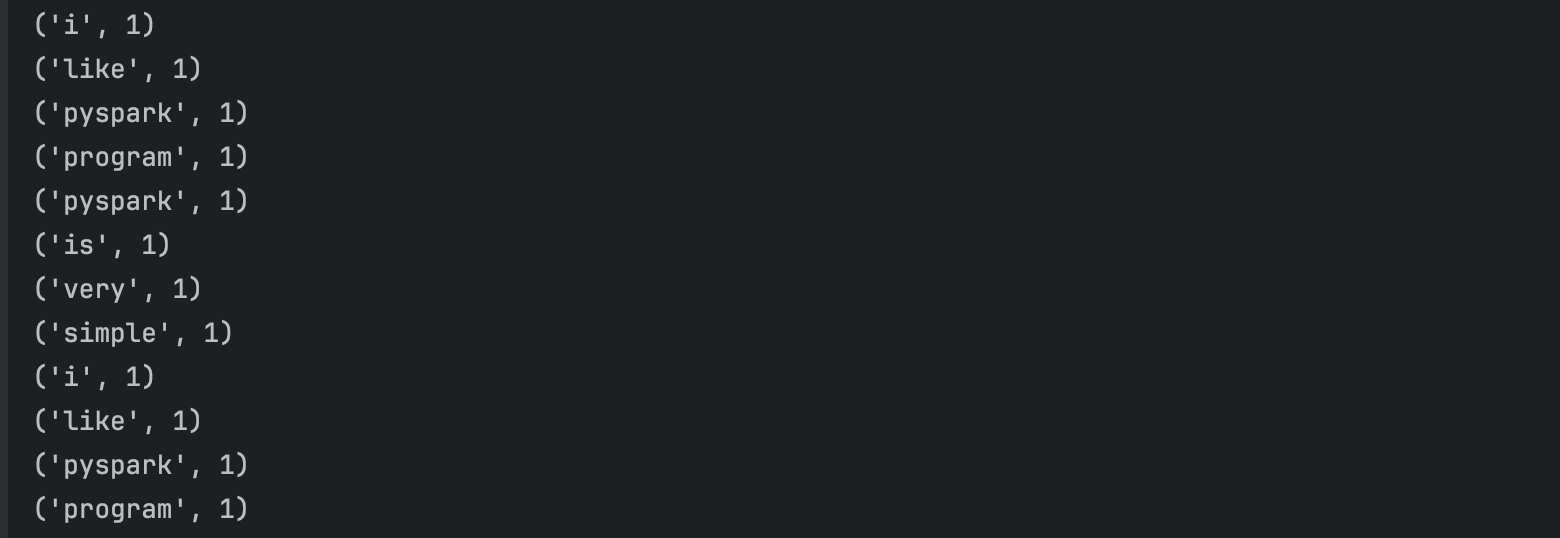
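To see why flatMap is used in this step rather than map, here is a minimal plain-Python sketch of the difference. It does not use Spark at all: the list `lines` stands in for the RDD, and `split_words` is a hypothetical helper that mirrors the lambda above.

```python
from itertools import chain

# Sample lines from the text file shown at the top of the post.
lines = [
    "i like pyspark program",
    "pyspark is very simple",
    "i like pyspark program",
]


def split_words(line):
    """Hypothetical helper: split one line on single spaces, like the lambda above."""
    return line.split(' ')


# map-like behaviour: one output element (a list of words) per input line.
mapped = [split_words(line) for line in lines]

# flatMap-like behaviour: the per-line lists are flattened into one sequence of words.
flat_mapped = list(chain.from_iterable(split_words(line) for line in lines))

print(len(mapped))       # 3, one list per line
print(len(flat_mapped))  # 12, one entry per word
print(flat_mapped[:4])   # ['i', 'like', 'pyspark', 'program']
```

With map, the next step would receive whole lists instead of individual words, so the per-word pairing in step 3 would not work as intended.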

3. Convert the previous RDD into a key-value pair RDD

To count the frequency of each word, map each word to a key-value pair where the key is the word and the value is 1. This can be done using the map transformation.

df_pair = df_split.map(lambda value: (value, 1))
data = df_pair.collect()
for x in data:
    print(x)

Output:

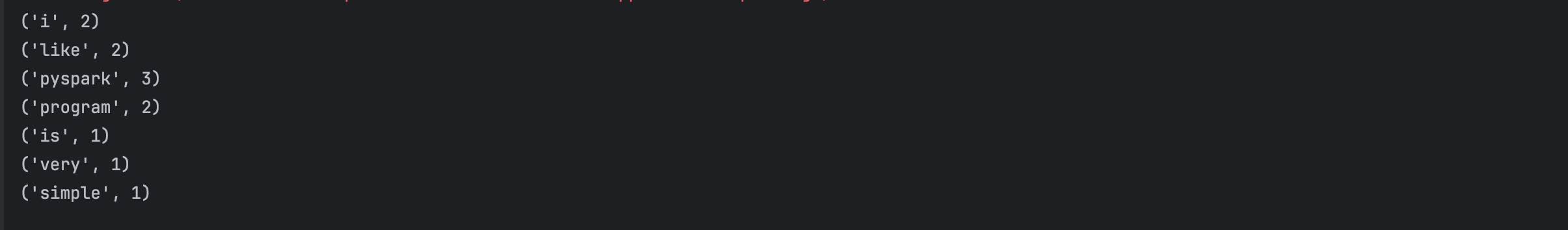

4. Use reduceByKey

Now, sum the values for each key (word) using the reduceByKey transformation. This will aggregate the counts for each word.

df_count = df_pair.reduceByKey(lambda x, y: x + y)
# df_count = df_pair.reduceByKey(add)  # this works as well; requires "from operator import add"
data = df_count.collect()
for x in data:
    print(x)

Output:
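As a local sanity check of what reduceByKey computes, the sketch below mimics the aggregation in plain Python for the three sample lines. The function `reduce_by_key` is a hypothetical stand-in written for illustration, not part of the PySpark API, and it ignores partitioning entirely.

```python
from functools import reduce
from operator import add

# Sample lines from the text file shown at the top of the post.
lines = [
    "i like pyspark program",
    "pyspark is very simple",
    "i like pyspark program",
]

# Same shape as the pair RDD from step 3: one (word, 1) tuple per word.
pairs = [(word, 1) for line in lines for word in line.split(' ')]


def reduce_by_key(pairs, func):
    """Hypothetical helper: group values by key, then fold each group with func."""
    grouped = {}
    for key, value in pairs:
        grouped.setdefault(key, []).append(value)
    return {key: reduce(func, values) for key, values in grouped.items()}


counts = reduce_by_key(pairs, add)

for word, count in counts.items():
    print((word, count))
```

For this input the sketch yields 3 for "pyspark", 2 each for "i", "like" and "program", and 1 each for "is", "very" and "simple". The order in which Spark returns the pairs from collect() may differ, since it depends on how the keys are partitioned.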

Complete code example

from operator import add  # only needed for the reduceByKey(add) variant below

from pyspark.sql import SparkSession

# Create (or reuse) a local Spark session
spark_session: SparkSession = SparkSession.builder.master("local").appName("world_count").getOrCreate()

# 1. Read the text file and print its contents
rdd = spark_session.sparkContext.textFile("/Users/apple/PycharmProjects/pyspark/data/text/word_count.txt")
data = rdd.collect()
for x in data:
    print(x)

# 2. Split each line on spaces and flatten the result into one RDD of words
df_split = rdd.flatMap(lambda data: data.split(' '))
data = df_split.collect()
for x in data:
    print(x)

# 3. Map each word to a (word, 1) pair
df_pair = df_split.map(lambda value: (value, 1))
data = df_pair.collect()
for x in data:
    print(x)

# 4. Sum the counts for each word
df_count = df_pair.reduceByKey(lambda x, y: x + y)
# df_count = df_pair.reduceByKey(add)  # this works as well
data = df_count.collect()
for x in data:
    print(x)
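One caveat about splitting with split(' '): if a line contains consecutive or trailing spaces, the result includes empty strings, which would then be counted as a "word". The plain-Python sketch below shows the difference; `tokenize` and `messy_line` are hypothetical names used only for illustration.

```python
def tokenize(line):
    """Hypothetical helper: split on any run of whitespace, dropping empty tokens."""
    return line.split()


# A line with a double space and a trailing space (not from the sample file).
messy_line = "i  like pyspark "

naive_tokens = messy_line.split(' ')
clean_tokens = tokenize(messy_line)

print(naive_tokens)  # ['i', '', 'like', 'pyspark', '']
print(clean_tokens)  # ['i', 'like', 'pyspark']
```

The sample file in this post has exactly one space between words, so both approaches give the same result there. For messier input, passing a function like `tokenize` to flatMap instead of the split(' ') lambda is one possible way to avoid the empty tokens.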