Using the groupByKey Function in PySpark - Comprehensive Guide

Understanding the groupByKey Function in PySpark

The groupByKey function in PySpark is a fundamental operation for grouping the data in a key-value RDD by key. It lets you organize a large dataset so that all values belonging to one key can be processed together, for example to compute a per-key aggregate.

What is the groupByKey Function in PySpark?

The groupByKey function is called on an RDD of key-value pairs and groups all values that share a key. It returns an RDD of pairs in which each key is paired with an iterable of its values. It is useful, but it can be resource-intensive: every value is shuffled across the network so that all values for a key end up in the same partition.
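To make the grouping semantics concrete, here is a minimal single-machine sketch in plain Python. The helper `group_by_key_local` is a hypothetical name used only for illustration; it is not part of PySpark, and it ignores partitioning and shuffling entirely. It only shows the shape of the result: one entry per key, holding every value seen for that key.

```python
from collections import defaultdict


def group_by_key_local(pairs):
    """Hypothetical single-machine stand-in for groupByKey (not a PySpark API)."""
    groups = defaultdict(list)
    for key, value in pairs:
        # Append each value to the list kept for its key
        groups[key].append(value)
    return dict(groups)


pairs = [("pyspark", 3), ("is", 5), ("pyspark", 4)]
grouped = group_by_key_local(pairs)
print(grouped)  # {'pyspark': [3, 4], 'is': [5]}
```

Unlike this sketch, Spark does not guarantee the order of the values inside each group, so code that consumes the grouped values should not rely on it.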

Using the groupByKey Function in PySpark

Here’s a basic example of how to use the groupByKey function:

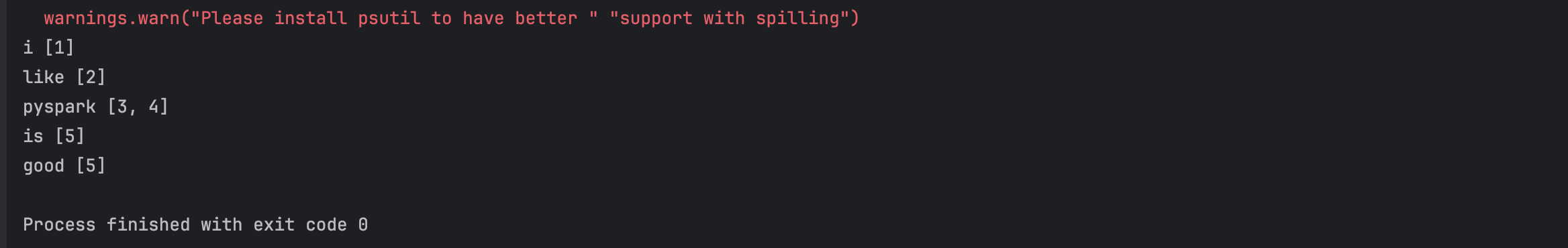

# Example of using groupByKey to group data by key
from pyspark.sql import SparkSession

spark_session = SparkSession.builder.master("local").appName("testing").getOrCreate()

data = [("i", 1), ("like", 2), ("pyspark", 3), ("pyspark", 4), ("is", 5), ("good", 5)]
rdd = spark_session.sparkContext.parallelize(data)

# groupByKey returns (key, iterable) pairs; list() materializes the values for printing
grouped_rdd = rdd.groupByKey()
for key, values in grouped_rdd.collect():
    print(key, list(values))

Real-World Example: Aggregating Sales Data

Consider a scenario where you have sales data, and you want to aggregate the total sales by product:

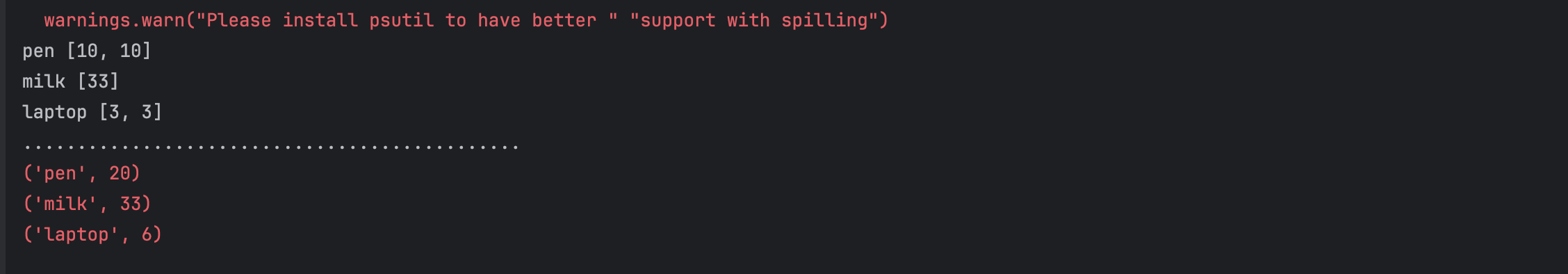

# Example of using groupByKey to total sales by product
from pyspark.sql import SparkSession

spark_session = SparkSession.builder.master("local").appName("groupByKey in rdd pyspark with example").getOrCreate()

data = [("pen", 10), ("milk", 33), ("laptop", 3), ("pen", 10), ("laptop", 3)]
rdd = spark_session.sparkContext.parallelize(data)

# Show the grouped values for each product
grouped_rdd = rdd.groupByKey()
for key, values in grouped_rdd.collect():
    print(key, list(values))

print("..............................................")

# Sum the grouped values per product; sum accepts the iterable directly
grouped_rdd_sum = grouped_rdd.mapValues(sum)

# collect() returns the results to the driver; foreach(print) would print on the executors when run on a cluster
for record in grouped_rdd_sum.collect():
    print(record)

Performance Considerations

Using groupByKey can be inefficient on large datasets because every key-value pair is shuffled before any aggregation happens, and all the values for a key have to be held in memory together. When the goal is an aggregate such as a sum or a count, consider reduceByKey instead: it combines the values for each key within each partition first, so only the partial results are shuffled across the network.

Conclusion

The groupByKey function is a versatile tool in PySpark that allows you to group data by key, enabling complex aggregations and data transformations. However, understanding its performance implications and considering alternatives like reduceByKey can help optimize your PySpark applications.